Building a Hadoop 3 Distributed Cluster on Huawei Cloud CentOS 7

Cluster Planning

| Node name (hostname) | IP |
| --- | --- |
| master | 170.158.10.101 |
| slave1 | 170.158.10.102 |
| slave2 | 170.158.10.103 |

Environment Preparation

Change the Hostname

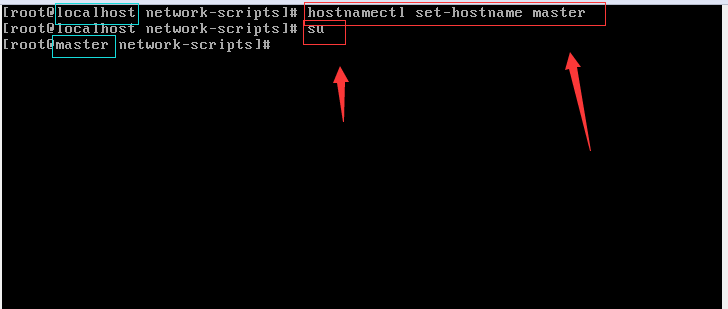

- Change the hostname

Run the matching command on master, slave1, and slave2 respectively:

# hostnamectl set-hostname master

# hostnamectl set-hostname slave1

# hostnamectl set-hostname slave2

- Run su to check the result

Edit the hosts File

On master, run:

vim /etc/hosts

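# Add the following entries
192.168.0.172 master
119.3.228.195 slave1
123.249.32.173 slave2

Configure slave1 and slave2 in the same way, following this rule.

When mapping IPs to hostnames in /etc/hosts:

1. The entry for the local machine itself must use its private (internal) IP.

2. The entries for the other machines must use their public IPs.

Concretely:

1. On the master server, map master to its own private IP and map the slave servers to their public IPs;

2. Likewise, on each slave server, map that server to its own private IP and map the master server (and the other slave) to their public IPs.

Reference: https://blog.csdn.net/xiaosa5211234554321/article/details/119627974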
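The rule above can be sketched as a small shell function that prints the /etc/hosts entries for a given node. This is only an illustration: the function names are hypothetical, master's private IP and the slaves' public IPs come from the example above, master's public IP is the one used in the browser verification step, and the slaves' private IPs are placeholders that must be replaced with real values.

```shell
#!/bin/sh
# Private (internal) IP of each node.
# Only master's value appears in this article; the slave values are placeholders.
private_ip() {
  case "$1" in
    master) echo "192.168.0.172" ;;
    slave1) echo "192.168.0.101" ;;  # placeholder, replace with the real private IP
    slave2) echo "192.168.0.102" ;;  # placeholder, replace with the real private IP
  esac
}

# Public IP of each node, as used elsewhere in this article.
public_ip() {
  case "$1" in
    master) echo "119.3.224.141" ;;
    slave1) echo "119.3.228.195" ;;
    slave2) echo "123.249.32.173" ;;
  esac
}

# Print the /etc/hosts entries for the node named in $1:
# private IP for the node itself, public IPs for the others.
hosts_entries_for() {
  self="$1"
  for node in master slave1 slave2; do
    if [ "$node" = "$self" ]; then
      echo "$(private_ip "$node") $node"
    else
      echo "$(public_ip "$node") $node"
    fi
  done
}

# Example: entries to append to /etc/hosts on slave1
hosts_entries_for slave1
```

Printing the entries instead of writing them makes it easy to review the result before appending it to /etc/hosts by hand.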

Create a Non-root User

Run on master, slave1, and slave2:

# Create the user (as root)
useradd hadoop
passwd hadoop

Grant the regular user sudo privileges, with no password required when running sudo:

chmod -v u+w /etc/sudoers

mode of ‘/etc/sudoers’ changed from 0440 (r--r-----) to 0640 (rw-r-----)

sudo vi /etc/sudoers

Below the line %wheel ALL=(ALL) ALL, add the following line:

hadoop ALL=(ALL) NOPASSWD: ALL

chmod -v u-w /etc/sudoers

mode of ‘/etc/sudoers’ changed from 0640 (rw-r-----) to 0440 (r--r-----)
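As an alternative to toggling the permissions of /etc/sudoers by hand, the same rule can go into a drop-in file, assuming the system's /etc/sudoers includes the /etc/sudoers.d directory (the CentOS 7 default). This is a different technique from the steps above, shown only as a sketch; the function name and the drop-in file name are hypothetical.

```shell
#!/bin/sh
# Print a passwordless-sudo rule for the user named in $1.
sudoers_rule() {
  echo "$1 ALL=(ALL) NOPASSWD: ALL"
}

# Preview the rule for the hadoop user
sudoers_rule hadoop

# To install it (as root), assuming /etc/sudoers.d is included by /etc/sudoers:
#   sudoers_rule hadoop > /etc/sudoers.d/hadoop
#   chmod 0440 /etc/sudoers.d/hadoop
#   visudo -cf /etc/sudoers.d/hadoop   # syntax check of the drop-in file
```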

Disable the Firewall

Run on master, slave1, and slave2:

systemctl stop firewalld

Passwordless SSH Login

Run on master, slave1, and slave2 as the hadoop user:

ssh-keygen -t rsa

After running the command, press Enter three times.

Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:DVFWqHDH+Hb+ThEissWHNGNl0NbMpDPJvyTzrZO02/Y root@master
The key's randomart image is:
+---[RSA 2048]----+
| .+O*+=. |
| . o*+*oo+ |
| oo+=.O . |
| .*oo.= . |
| S..oo + |
| .=.+ |
| o+o.|
| .=o.|
| .++E|
+----[SHA256]-----+

Then, on master, slave1, and slave2, still as the hadoop user, run ssh-copy-id against each node. When prompted, type yes and then enter the target machine's login password.

ssh-copy-id master

ssh-copy-id slave1

ssh-copy-id slave2
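Because the same three commands are repeated on every node, they can be generated from a node list. The sketch below only prints the commands so they can be reviewed first; the NODES variable and the function name are illustrative, and the explicit hadoop@ prefix is an assumption that the key should be installed for the hadoop user.

```shell
#!/bin/sh
NODES="master slave1 slave2"

# Print one ssh-copy-id command per node for the user named in $1.
copy_id_commands() {
  for node in $NODES; do
    echo "ssh-copy-id $1@$node"
  done
}

# Preview the commands for the hadoop user
copy_id_commands hadoop

# On a real cluster node, run each printed command in turn and answer
# the yes/password prompts as described above.
```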

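Install the JDK and Hadoop

Remove the System's Bundled JDK

Run on master, slave1, and slave2:

# List the JDK packages bundled with the system
rpm -qa | grep jdk
# Remove each package found
yum -y remove <name of the JDK package found above>
# Or remove them all with the following command
rpm -qa | grep -i java | xargs -n1 rpm -e --nodeps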

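Install the JDK

Run on master, slave1, and slave2:

Install the JDK. The package can be downloaded from the official site: https://www.oracle.com/java/technologies/downloads/#java8-windows

Create the software installation directory

Under /home/hadoop, create a soft directory to hold the installed software.

mkdir soft

Extract the JDK into the soft directory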

cd /home/hadoop/installfile/

tar -zxvf jdk-8u351-linux-x64.tar.gz -C /home/hadoop/soft

Configure environment variables:

Run on master, slave1, and slave2:

vi /etc/profile

#JDK1.8
export JAVA_HOME=/home/hadoop/soft/jdk1.8.0_351
export PATH=$PATH:$JAVA_HOME/bin

source /etc/profile

Run java -version. Output like the following means Java was installed successfully.

java version "1.8.0_351"
Java(TM) SE Runtime Environment (build 1.8.0_351-b10)
Java HotSpot(TM) 64-Bit Server VM (build 25.351-b10, mixed mode)

Install Hadoop

Run on master, slave1, and slave2:

cd /home/hadoop/installfile

wget http://archive.apache.org/dist/hadoop/core/hadoop-3.1.3/hadoop-3.1.3.tar.gz

tar -zxvf hadoop-3.1.3.tar.gz -C ~/soft

Configure environment variables:

vi /etc/profile

#HADOOP_HOME
export HADOOP_HOME=/home/hadoop/soft/hadoop-3.1.3
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin

source /etc/profile

Verify the installation:

hadoop version

Hadoop 3.1.3
Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r ba631c436b806728f8ec2f54ab1e289526c90579
Compiled by ztang on 2019-09-12T02:47Z
Compiled with protoc 2.5.0
From source with checksum ec785077c385118ac91aadde5ec9799
This command was run using /root/soft/hadoop-3.1.3/share/hadoop/common/hadoop-common-3.1.3.jar

Configure Hadoop

Run on master, slave1, and slave2:

Configure core-site.xml

Enter the Hadoop configuration directory

cd $HADOOP_HOME/etc/hadoop

In core-site.xml, add the following between <configuration> and </configuration>:

<!-- Address of the NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:8020</value>

</property>

<!-- Directory where Hadoop stores its data -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/soft/hadoop-3.1.3/data</value>

</property>

<!-- Static user for the HDFS web UI: hadoop -->

<property>

<name>hadoop.http.staticuser.user</name>

<value>hadoop</value>

</property>

<!-- Hosts from which the hadoop superuser is allowed to act as a proxy -->

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<!-- Groups whose members the hadoop superuser is allowed to impersonate -->

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<!-- Users the hadoop superuser is allowed to impersonate -->

<property>

<name>hadoop.proxyuser.hadoop.users</name>

<value>*</value>

</property>
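A quick way to double-check what was typed into a site file is to read a property back out of it. The helper below is a minimal sketch, not a real XML parser: get_property is a hypothetical name, and it assumes each name and value sits on a single line, as in the snippets above. A property that was accidentally entered twice shows up as two output lines.

```shell
#!/bin/sh
# Print the <value> of the property named $2 in the Hadoop site file $1.
# Assumes <name> and <value> each sit on a single line, as in this article.
get_property() {
  awk -v key="$2" '
    /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
    /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); if (n == key) print v }
  ' "$1"
}

# Demo on a temporary file holding one property from core-site.xml
demo=$(mktemp)
cat > "$demo" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://master:8020</value>
</property>
</configuration>
EOF
get_property "$demo" fs.defaultFS
rm -f "$demo"
```

On a node where Hadoop is already installed, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves, which is the more authoritative check.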

Configure hdfs-site.xml

vi hdfs-site.xml

<!-- NameNode web UI address -->

<property>

<name>dfs.namenode.http-address</name>

<value>master:59998</value>

</property>

<!-- SecondaryNameNode web UI address -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>slave2:9868</value>

</property>

Configure yarn-site.xml

vi yarn-site.xml

<!-- Site specific YARN configuration properties -->

<!-- Use the MapReduce shuffle auxiliary service -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Address of the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>slave1</value>

</property>

<!-- Environment variables inherited by containers -->

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

<!-- Minimum and maximum memory that can be allocated to a YARN container -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

</property>

<!-- Physical memory on each node that YARN may hand out to containers -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<!-- Disable YARN's physical and virtual memory limit checks -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- URL of the log aggregation server -->

<property>

<name>yarn.log.server.url</name>

<value>http://master:19888/jobhistory/logs</value>

</property>

<!-- Retain aggregated logs for 7 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>
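To make the memory settings above concrete: with 2048 MB per NodeManager and a 512 MB minimum allocation, a node can host at most four minimum-size containers. The sketch below does that arithmetic and also rounds a request up to a multiple of the minimum allocation. That rounding is an assumption about how the scheduler normalizes requests and should be verified against the scheduler actually in use; the variable and function names are illustrative.

```shell
#!/bin/sh
NODE_MEMORY_MB=2048      # yarn.nodemanager.resource.memory-mb
MIN_ALLOCATION_MB=512    # yarn.scheduler.minimum-allocation-mb

# Maximum number of minimum-size containers per node.
max_containers() {
  echo $((NODE_MEMORY_MB / MIN_ALLOCATION_MB))
}

# Round a request ($1, in MB) up to a multiple of the minimum allocation.
normalize_request() {
  echo $(( ($1 + MIN_ALLOCATION_MB - 1) / MIN_ALLOCATION_MB * MIN_ALLOCATION_MB ))
}

max_containers          # prints 4
normalize_request 700   # prints 1024
```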

Configure mapred-site.xml

vi mapred-site.xml

<!-- Run MapReduce jobs on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- History server address -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<!-- History server web UI address -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

Configure workers

vi workers

master
slave1
slave2

Note: lines in this file must not end with trailing spaces, and the file must not contain blank lines.
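Trailing spaces and blank lines are hard to see in an editor, so a small check can help. The function below is a sketch under the assumption that the file is plain text with one hostname per line; check_workers is a hypothetical name, not a Hadoop tool.

```shell
#!/bin/sh
# Return non-zero (and list the offending lines) if the workers file $1
# contains a blank line or a line ending in whitespace.
check_workers() {
  if grep -n -E '^[[:space:]]*$|[[:space:]]+$' "$1"; then
    echo "workers file has blank lines or trailing whitespace" >&2
    return 1
  fi
  echo "workers file looks clean"
}

# Demo on a temporary copy of the file shown above
demo=$(mktemp)
printf 'master\nslave1\nslave2\n' > "$demo"
check_workers "$demo"
rm -f "$demo"
```

On a real node the function would be pointed at $HADOOP_HOME/etc/hadoop/workers.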

Distribute the Hadoop configuration files to slave1 and slave2

Use the scp command.
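The article does not spell out the scp invocation, so the following is one possible form rather than the author's exact command. It assumes Hadoop is installed under the same path on every node, that the files are copied as the hadoop user, and that passwordless SSH is already set up; sync_commands is a hypothetical helper that only prints the commands.

```shell
#!/bin/sh
# Installation path used in this article; override by exporting HADOOP_HOME.
HADOOP_HOME="${HADOOP_HOME:-/home/hadoop/soft/hadoop-3.1.3}"

# Print one scp command per target node given as arguments.
sync_commands() {
  for node in "$@"; do
    echo "scp -r $HADOOP_HOME/etc/hadoop hadoop@$node:$HADOOP_HOME/etc/"
  done
}

# Preview the commands for the two slave nodes
sync_commands slave1 slave2
```

Each printed command copies the local etc/hadoop directory into the remote etc/ directory, overwriting the configuration files there.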

Format the File System

On master, from any directory, run hdfs namenode -format to format the NameNode. Formatting is required once before first use and does not need to be repeated afterwards. If some configuration files are changed later, a reformat may be needed again; note that reformatting erases the existing HDFS metadata, so stop the cluster and clear the old data directories on every node before doing it.

Output like the following indicates success:

2022-11-27 09:46:02,851 INFO namenode.FSImage: Allocated new BlockPoolId: BP-2006367298-119.3.224.141-1669513562846
2022-11-27 09:46:02,863 INFO common.Storage: Storage directory /root/soft/hadoop-3.1.3/data/dfs/name has been successfully formatted.
2022-11-27 09:46:02,883 INFO namenode.FSImageFormatProtobuf: Saving image file /root/soft/hadoop-3.1.3/data/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
2022-11-27 09:46:02,942 INFO namenode.FSImageFormatProtobuf: Image file /root/soft/hadoop-3.1.3/data/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .
2022-11-27 09:46:02,952 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2022-11-27 09:46:02,955 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.
2022-11-27 09:46:02,955 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at master/119.3.224.141
************************************************************/

Start the Cluster

Start HDFS

On master, run the command to start HDFS:

start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.
Starting namenodes on [master]
Last login: Sun Nov 27 10:31:48 CST 2022 from 112.36.201.71 on pts/0
Starting datanodes
Last login: Sun Nov 27 10:32:06 CST 2022 on pts/0
Starting secondary namenodes [slave2]
Last login: Sun Nov 27 10:32:09 CST 2022 on pts/0

If the scripts are run as root and the error ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation appears,

add the following to /home/hadoop/soft/hadoop-3.1.3/sbin/start-dfs.sh and stop-dfs.sh:

HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root

If the error master: ERROR: Cannot set priority of namenode process 5830 appears,

it may be caused by a port conflict or by a network communication problem. Check that /etc/hosts is configured as described earlier.

Check the log /home/hadoop/soft/hadoop-3.1.3/logs/hadoop-root-namenode-master.log:

2022-11-27 10:04:20,754 INFO org.apache.hadoop.http.HttpServer2: HttpServer.start() threw a non Bind IOException

java.net.BindException: Port in use: master:9870

at org.apache.hadoop.http.HttpServer2.constructBindException(HttpServer2.java:1213)

at org.apache.hadoop.http.HttpServer2.bindForSinglePort(HttpServer2.java:1235)

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:1294)

at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:1149)

at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:181)

at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:881)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:703)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:949)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:922)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1688)
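When the log is long, the bind failures can be pulled out directly instead of scrolling through stack traces. The function below is a sketch that relies only on the "Port in use" wording seen in the exception above; find_port_conflicts is a hypothetical name.

```shell
#!/bin/sh
# List the distinct "Port in use" addresses reported in the log file $1.
find_port_conflicts() {
  grep -o 'Port in use: [^[:space:]]*' "$1" | sort -u
}

# Demo on a temporary file containing the exception line shown above
demo=$(mktemp)
echo 'java.net.BindException: Port in use: master:9870' > "$demo"
find_port_conflicts "$demo"
rm -f "$demo"
```

On the affected node, running ss -lntp | grep ':9870' as root should show which process, if any, is already listening on the reported port. If nothing is listening there, the hostname-to-IP mapping in /etc/hosts is the more likely cause.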


Start the History Server

Per the earlier configuration, run on master:

mapred --daemon start historyserver

Start YARN

Per the earlier configuration, run the command to start YARN on slave1:

start-yarn.sh

Starting resourcemanager
Last login: Sun Nov 27 10:43:05 CST 2022 from 112.36.201.71 on pts/0
Starting nodemanagers
Last login: Sun Nov 27 10:53:56 CST 2022 on pts/0

If the following error appears:

ERROR: Attempting to operate on yarn nodemanager as root
ERROR: but there is no YARN_NODEMANAGER_USER defined. Aborting operation.

add the following near the top of /home/hadoop/soft/hadoop-3.1.3/sbin/start-yarn.sh and stop-yarn.sh:

YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root

Verify the Results

Process Verification

Run the jps command on each machine:

- NameNode on master, plus SecondaryNameNode on master or another node (slave2 in this configuration), means HDFS started successfully

- slave1 and slave2 run DataNode (so does master, since it is listed in workers)

- slave1 runs ResourceManager and NodeManager

Output of jps on each node:

[root@master ~]# jps
14054 JobHistoryServer
14310 Jps
13575 NameNode
14169 NodeManager
794 WrapperSimpleApp
13755 DataNode

[root@slave1 ~]# jps
4017 DataNode
789 WrapperSimpleApp
4743 Jps
4394 NodeManager
4236 ResourceManager

[root@slave2 ~]# jps
5440 Jps
5111 DataNode
5303 NodeManager
5212 SecondaryNameNode
751 WrapperSimpleApp

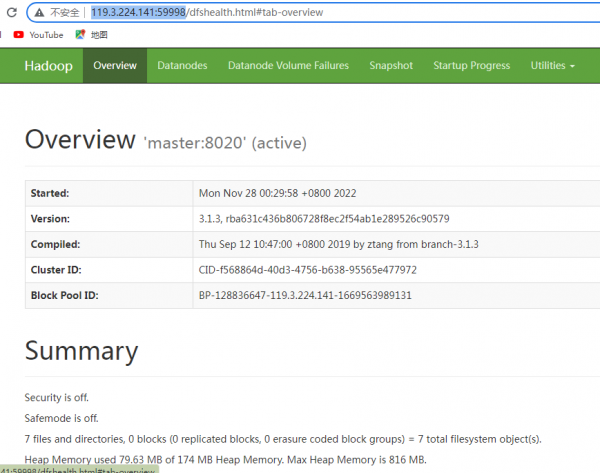
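The per-node checks above can also be scripted. The function below is a sketch: check_daemons is a hypothetical helper that compares jps output against a list of expected daemon names, and the sample text is the master output from this article.

```shell
#!/bin/sh
# Check that every daemon name in $2.. appears in the jps output given as $1.
# Prints each missing daemon and returns non-zero if any is missing.
check_daemons() {
  output="$1"
  shift
  missing=0
  for daemon in "$@"; do
    if ! printf '%s\n' "$output" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}

# Demo with the master output shown above; on a live node pass "$(jps)" instead
sample='14054 JobHistoryServer
14310 Jps
13575 NameNode
14169 NodeManager
794 WrapperSimpleApp
13755 DataNode'
check_daemons "$sample" NameNode DataNode NodeManager JobHistoryServer && echo "master OK"
```

Matching on the whole second column keeps NameNode from being satisfied by a SecondaryNameNode line.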

Browser Verification

http://119.3.224.141:59998/ (119.3.224.141 is the public IP of the master node; before verifying, open port 59998 in the Huawei Cloud security group)
