Python Scraper Case Study: Scraping Made-in-China.com with Selenium

From CloudWiki

Lab Overview

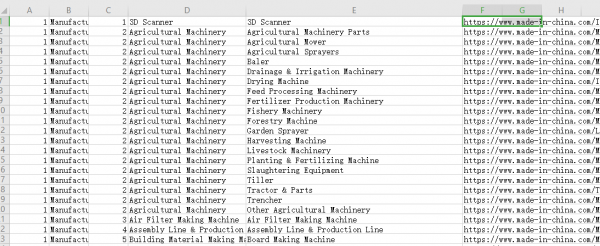

All of Made-in-China.com's top-level categories are listed here: https://www.made-in-china.com/prod/catlist/

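However, that page does not show the complete list of second-level categories. To see all of them, you have to follow each top-level category's "More" link.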

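Crawling the category tree therefore takes two steps: first collect the link of each top-level category, then scrape the second- and third-level categories from each top-level category page.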
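The first step depends on a rule built into the script below: a top-level category's full category list lives at a URL derived from the category title. Here is a minimal sketch of that rule as a standalone function. title_to_url and BASE are names introduced only for illustration. The URL scheme is copied from the script, so it may stop working if the site changes its paths.

```python
BASE = "https://www.made-in-china.com/multi-search/catlist/listsubcat/"


def title_to_url(title: str) -> str:
    """Turn a top-level category title into the URL of its full category list.

    Uses the same replacements as the scraper: " & " and ", " become "-",
    then every remaining space becomes "-".
    """
    slug = title.replace(" & ", "-").replace(", ", "-").replace(" ", "-")
    return BASE + slug + "-Catalog/2.html#categories"


print(title_to_url("Industrial Equipment & Components"))
# → https://www.made-in-china.com/multi-search/catlist/listsubcat/Industrial-Equipment-Components-Catalog/2.html#categories
```

The slug rule is only a heuristic. That is why the script requests each generated URL and prints its status code before the URL is trusted.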

Lab Steps

Scraping the top-level category links

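```python
import time

import requests
from bs4 import BeautifulSoup

r = requests.get("https://www.made-in-china.com/prod/catlist/")
r.encoding = 'utf-8'
soup = BeautifulSoup(r.text, "html.parser")
# print(soup.prettify())  # uncomment to inspect the downloaded page

# Each top-level category name is an <a class="title-anchor"> link
a_list = soup.find_all('a', class_='title-anchor')

for count, i in enumerate(a_list, start=1):
    title = i.get_text().strip()
    print(str(count) + ":" + title)
    # Turn the name into a URL slug,
    # e.g. "Industrial Equipment & Components" -> "Industrial-Equipment-Components"
    title = title.replace(" & ", "-").replace(", ", "-").replace(" ", "-")
    url = ("https://www.made-in-china.com/multi-search"
           "/catlist/listsubcat/" + title + "-Catalog/2.html#categories")
    print(url)
    # Request the generated URL to check that it exists (200 = OK)
    response = requests.get(url)
    print(str(count) + ": status code:", response.status_code)
    time.sleep(2)  # pause between requests to avoid hammering the site
```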

You can save these URLs to a CSV file so the next step can use them.
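Here is a minimal sketch of the saving step. It assumes the loop above is changed to collect (title, url) pairs in a list. save_title_csv is a hypothetical helper. It writes the num, header1, header1_url layout that the next script reads from madeInChina_title.csv.

```python
import csv


def save_title_csv(rows, path="madeInChina_title.csv"):
    """Write (title, url) pairs as num,header1,header1_url lines.

    csv.writer quotes any field that contains a comma, so a title such as
    "Agriculture, Food" stays in a single column.
    """
    with open(path, "w", newline="", encoding="utf-8") as fw:
        writer = csv.writer(fw)
        for num, (title, url) in enumerate(rows, start=1):
            writer.writerow([num, title, url])
```

Call save_title_csv(pairs) once after the loop. csv.writer quotes titles that contain commas, so read the file back with csv.reader rather than by splitting each line on ",".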

Scraping the second- and third-level categories

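This step reads madeInChina_title.csv, the file saved in the previous step, where each line holds num, header1 and header1_url. It opens each top-level category page and writes that category's second- and third-level categories to made_in_china_sub_cat.csv.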

```python
import csv
import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.chrome.service import Service

# Initial settings: adjust the chromedriver path to your own installation
driver = webdriver.Chrome(service=Service(
    "C:/Program Files (x86)/Google/Chrome/Application/chromedriver.exe"))

# Output file name
sub_cat_file = 'made_in_china_sub_cat.csv'


def get_sub_cat(num, header1):
    """Parse the current page and append its 2nd/3rd-level categories.

    num and header1: sequence number and name of the top-level category.
    """
    soup = BeautifulSoup(driver.page_source, "html.parser")
    li_list = soup.find_all('li', class_='items-line-list')
    print(len(li_list))

    rows = []
    count = 1
    header2 = ""
    header2_url = ""
    for i in li_list:
        h2 = i.find('h2', class_='sub-title')
        if h2 is not None:
            # A new second-level category starts here
            header2 = h2.a.get_text().strip()
            header2_url = "https:" + h2.a['href']  # hrefs are protocol-relative
            print(f"{num},{header1},{count},second-level: {header2}")
            print(f"{num},{header1},{count},second-level URL: {header2_url}")

        h3_list = i.find_all('h3')
        if len(h3_list) == 0:
            # Only a second-level category: repeat it in the third-level column
            rows.append([num, header1, count, header2, header2, header2_url])
        else:
            # The second-level category has third-level categories
            for j in h3_list:
                header3 = j.a.get_text().strip()
                header3_url = "https:" + j.a['href']
                print(f"{num},{header1},{count},{header2},{header3},{header3_url}")
                rows.append([num, header1, count, header2, header3, header3_url])

        if h2 is not None:
            count += 1  # next second-level category

    with open(sub_cat_file, "a", newline="", encoding="utf-8") as fw:
        csv.writer(fw).writerows(rows)


def scroll():
    """Scroll down in ten steps so lazily loaded content gets rendered."""
    for i in range(1, 11):
        driver.execute_script(
            "window.scrollTo(0, document.body.scrollHeight*" + str(i) + "/10)")
        time.sleep(0.25)


def browse(url, retries=3):
    """Open url in the browser; retry a limited number of times on timeout."""
    for attempt in range(retries):
        try:
            driver.get(url)
            scroll()
            # Let the page finish loading; raise this (e.g. to 60 s)
            # if a CAPTCHA has to be filled in by hand
            time.sleep(2)
            print("Page loaded!")
            return "ok"
        except TimeoutException:
            print(f"Timeout on attempt {attempt + 1}, retrying...")
    return None


def read_total_category_file():
    """Read madeInChina_title.csv; each row holds num, header1, header1_url."""
    cat_list = []
    with open('madeInChina_title.csv', newline="", encoding="utf-8") as fp:
        for data in csv.reader(fp):
            cat_list.append({'num': data[0], 'header1': data[1],
                             'header1_url': data[2]})
    return cat_list


def main():
    start = time.perf_counter()  # time.clock() was removed in Python 3.8
    try:
        for d in read_total_category_file():
            # d holds: num, header1, header1_url
            if browse(d['header1_url']) == "ok":
                get_sub_cat(d['num'], d['header1'])  # second-level categories
    finally:
        driver.quit()
    print("Time used:", time.perf_counter() - start)


if __name__ == '__main__':
    main()
```
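The rows that get_sub_cat writes follow a fixed layout: num, header1, count, header2, header3, url. A second-level category with no third-level children is written once, with header2 repeated in the header3 column. Below is a simplified sketch of that rule. It assumes the page has already been parsed into nested tuples. flatten_categories and its input structure are hypothetical, since the real function works on the HTML directly.

```python
def flatten_categories(num, header1, sub_cats):
    """Turn one top-level category into made_in_china_sub_cat.csv rows.

    sub_cats: list of (header2, header2_url, children) tuples, where
    children is a list of (header3, header3_url) pairs (hypothetical input).
    Output rows: [num, header1, count, header2, header3, url].
    """
    rows = []
    for count, (header2, header2_url, children) in enumerate(sub_cats, start=1):
        if not children:
            # No third level: reuse the second-level name and URL
            rows.append([num, header1, count, header2, header2, header2_url])
        for header3, header3_url in children:
            rows.append([num, header1, count, header2, header3, header3_url])
    return rows
```

Keeping the six-column layout uniform means the next script can treat every row the same way and always read the category to crawl from columns 4 and 5.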

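Scraping product lists by second- and third-level category

This script reads made_in_china_sub_cat.csv, opens each category's product list and follows the "next" links for up to 20 pages per category.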
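Product lists are paginated. The script's next_page function reads the href of the page's "next" link, drops everything after ";", replaces the trailing page number and prepends "https:". Here is the same rule as a pure function. This sketch assumes protocol-relative hrefs that end in -<page>.html. next_page_url and the sample href are hypothetical.

```python
def next_page_url(href, num):
    """Build the URL of page `num` from the href of a "next" link.

    Drops anything after ";" (e.g. a session id), cuts off the trailing
    "-<page>.html" and appends "-<num>.html". Assumes href starts with "//".
    """
    prefix = href.split(";")[0].rsplit("-", 1)[0]
    return "https:" + prefix + "-" + str(num) + ".html"


# Hypothetical href, for illustration only
print(next_page_url(
    "//www.made-in-china.com/products-search/hot-china-products/Pneumatic_Pipe-2.html", 3))
# → https://www.made-in-china.com/products-search/hot-china-products/Pneumatic_Pipe-3.html
```

Because rsplit is limited to one split, hyphens earlier in the URL (such as in the host name) are left alone.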

```python
import csv
import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# Initial settings: adjust the chromedriver path to your own installation
driver = webdriver.Chrome(service=Service(
    "C:/Program Files (x86)/Google/Chrome/Application/chromedriver.exe"))

# Timestamp and output file name
now_time = time.strftime("%Y%m%d%H%M%S", time.localtime())
# Third-level category to start (or resume) from; set it to the class3 value
# of the first row in made_in_china_sub_cat.csv to crawl everything
start_class3 = 'Pneumatic Pipe'
start_page = 1
end_page = start_page + 20  # usually left unchanged: 20 pages per category
file_name = ('made in china_' + now_time + "_" + start_class3 + "_"
             + str(start_page) + "_" + str(end_page) + '.csv')

soup = None  # parsed HTML of the most recently loaded page


def browse(url):
    """Load url, scroll down in steps and parse the page into soup."""
    global soup
    try:
        driver.get(url)
        for i in range(1, 11):
            driver.execute_script(
                "window.scrollTo(0, document.body.scrollHeight*" + str(i) + "/10)")
            time.sleep(0.25)
        # time.sleep(60)  # uncomment to leave time for solving a CAPTCHA by hand
        print("Page loaded!")
        soup = BeautifulSoup(driver.page_source, "html.parser")
        return 0
    except Exception as ex:
        print("Exception: %s" % ex)
        return 1


def get_products(page_number, class1, class2, class3):
    """Return one CSV line per product on the current page."""
    total = ""
    div_list = soup.find_all('div', class_='list-node')
    print(len(div_list))
    for count, i in enumerate(div_list, start=1):
        try:
            # Source site, page number and the three category levels
            item_info = "made in china," + str(page_number) + ","
            item_info += class1 + "," + class2 + "," + class3 + ","
            # Product image
            img = i.find('img')
            item_info += img['src'] + ","
            # Product name and link (commas replaced so they don't split the CSV)
            title = i.find('h2', class_="product-name").a
            product_name = title.get_text().strip().replace(",", "_")
            item_info += product_name + "," + title['href'] + ","
            # Price and unit, given as "price/unit"; the unit may be missing
            detail = i.find('div', class_='product-property').find('span')
            detail = detail.get_text().strip().replace(",", "_")
            product_price, _, product_unit = detail.partition("/")
            item_info += product_price.strip() + "," + product_unit.strip() + ","
            # Supplier (class name "compnay-name" kept exactly as in the original)
            supplier = i.find('a', class_="compnay-name")
            s_name = supplier.get_text().strip().replace(",", "_")
            s_href = ('https:' + supplier['href']).replace(",", "_")
            item_info += s_name + "," + s_href + ",China,"  # country fixed to China
            print(str(count) + ":" + item_info)
            total += item_info + "\n"
        except Exception as ex:  # skip products whose markup doesn't match
            print("Exception: %s" % ex)
    return total  # all product lines of this page


def read_category_file(start_class3):
    """Read made_in_china_sub_cat.csv, skipping rows before start_class3.

    Each row holds: num, class1, count, class2, class3, class3_url.
    """
    cat_list = []
    begin = False  # becomes True once start_class3 has been reached
    with open('made_in_china_sub_cat.csv', newline="", encoding="utf-8") as fp:
        for data in csv.reader(fp):
            d = {'class1': data[1], 'class2': data[3],
                 'class3': data[4], 'url': data[5].strip()}
            if d['class3'] == start_class3:
                begin = True
            if begin:
                cat_list.append(d)
    return cat_list


def get_category_info(class1, class2, class3, url):
    """Crawl pages start_page..end_page-1 of one category and append to file_name."""
    info = ""
    this_url = url
    for i in range(start_page, end_page):
        if browse(this_url) != 0:
            break  # page failed to load; soup would be stale
        info += get_products(i, class1, class2, class3)
        this_url = next_page(i + 1)
        if this_url is None:  # no "next" link: last page reached
            break
    with open(file_name, "a", encoding="utf-8") as fw:
        fw.write(info)


def next_page(num):
    """Build the URL of page num from the current page's "next" link."""
    try:
        href = soup.find('a', class_="next")['href']
        # Drop anything after ";", then replace the trailing "-<n>.html"
        prefix = href.split(";")[0].rsplit("-", 1)[0]
        return "https:" + prefix + "-" + str(num) + ".html"
    except Exception as ex:  # no "next" link or unexpected markup
        print("Exception: %s" % ex)
        return None


def main():
    start = time.perf_counter()  # time.clock() was removed in Python 3.8
    try:
        # Start reading at the third-level category start_class3
        for d in read_category_file(start_class3):
            # class1, class2, class3: the three category levels
            get_category_info(d['class1'], d['class2'], d['class3'], d['url'])
    finally:
        driver.quit()
    print("Time used:", time.perf_counter() - start)


if __name__ == '__main__':
    main()
```
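get_products keeps its output parseable by replacing commas in product and supplier names with "_". The original does not do this, but an alternative is to let csv.writer quote such fields. Here is a minimal sketch with a hypothetical to_csv_line helper. The sample record is invented.

```python
import csv
import io


def to_csv_line(fields):
    """Serialise one record with csv.writer instead of replacing commas.

    Fields containing commas or quotes are quoted, so names keep their
    original punctuation.
    """
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow(fields)
    return buf.getvalue()


# Hypothetical record: site, page, class3, product name, product URL
line = to_csv_line(["made in china", 1, "Pneumatic Pipe", "PU Tube, 8mm",
                    "https://example.com/p"])
print(line, end="")
# → made in china,1,Pneumatic Pipe,"PU Tube, 8mm",https://example.com/p
```

Inside get_products, you would collect each product's fields in a list and add to_csv_line(fields) to total. A file written this way must then be read with csv.reader.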