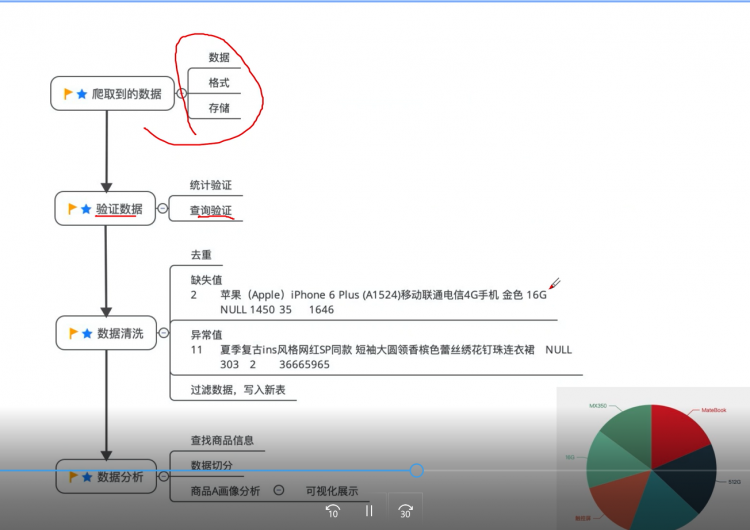

Product Data Analysis

The steps are as follows:

Scrape product data from an online store, then analyze it.

Scraping the data and saving it as CSV

import requests
import pandas as pd
from lxml import etree

urls = 'http://880098.cn/index.php?s=/index/goods/index/id/%d.html'
data = []


def first(ele, path):
    # xpath() returns a list; return '' for a missing node instead of raising IndexError
    result = ele.xpath(path)
    return result[0] if result else ''


for page in range(1, 30):  # goods ids 1-29
    url = urls % page
    response = requests.get(url, timeout=10)
    html = response.text
    # The site shows "资源不存在或已被删除" (resource does not exist or was deleted) for missing ids
    if "资源不存在或已被删除" in html:
        print(str(page) + ": no data on this page")
        continue
    print("Scraping page " + str(page))
    ele = etree.HTML(html)
    info = {
        'id': page,
        '题目': first(ele, '/html/body/div[5]/div[2]/div[2]/div[1]/h1/text()'),  # title
        '价格': first(ele, '/html/body/div[5]/div[2]/div[2]/div[2]/div/div[2]/dd/b/text()'),  # price
        '销售量': first(ele, '/html/body/div[5]/div[2]/div[2]/div[2]/ul/li[1]/div/span[2]/text()'),  # sales
        '浏览量': first(ele, '/html/body/div[5]/div[2]/div[2]/div[2]/ul/li[2]/div/span[2]/text()'),  # views
        '库存': first(ele, '/html/body/div[5]/div[2]/div[2]/div[2]/dl/dd/div[2]/div[3]/form/div[1]/div/dd/span/span/text()'),  # stock
    }
    data.append(info)

# Write the CSV once, after all pages have been scraped
fc = pd.DataFrame(data)
fc.to_csv("test.csv", index=False, encoding='utf-8')

Upload the CSV to the target folder on the server with Xftp.

Sample of the scraped data (columns: id, title, price, sales, views, stock):

1,MIUI/小米 小米手机4 小米4代 MI4智能4G手机包邮 黑色 D-LTE(4G)/TD-SCD,2100,684,0,125

2,苹果(Apple)iPhone 6 Plus (A1524)移动联通电信4G手机 金色 16G,4500.00-6800.00,714,0,1701

3,Samsung/三星 SM-G8508S GALAXY Alpha四核智能手机 新品 闪耀白,3888,546,0,235

4,Huawei/华为 H60-L01 荣耀6 移动4G版智能手机 安卓,1999,630,0,537

5,Meizu/魅族 MX4 Pro移动版 八核大屏智能手机 黑色 16G,2499,1037,,434

6,vivo X5MAX L 移动4G 八核超薄大屏5.5吋双卡手机vivoX5max,2998.9,608,0,319

7,纽芝兰包包女士2018新款潮百搭韩版时尚单肩斜挎包少女小挎包链条,168,482,0,320

8,MARNI Trunk 女士 中号拼色十字纹小牛皮 斜挎风琴包,356,473,0,35
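Several sample values do not look like plain integers: a price range, a decimal price, and an empty field. Since the Hive table created below declares these columns as int, such rows are worth checking before loading. A minimal standard-library sketch, assuming the six-column layout above, lists every numeric field that is not a plain non-negative integer; `SAMPLE` holds a subset of the rows above, and `non_integer_fields` is a hypothetical helper name.

```python
import csv
import io

# A subset of the scraped rows shown above.
# Columns: id, title, price, sales, views, stock.
SAMPLE = """\
1,MIUI/小米 小米手机4 小米4代 MI4智能4G手机包邮 黑色 D-LTE(4G)/TD-SCD,2100,684,0,125
2,苹果(Apple)iPhone 6 Plus (A1524)移动联通电信4G手机 金色 16G,4500.00-6800.00,714,0,1701
5,Meizu/魅族 MX4 Pro移动版 八核大屏智能手机 黑色 16G,2499,1037,,434
6,vivo X5MAX L 移动4G 八核超薄大屏5.5吋双卡手机vivoX5max,2998.9,608,0,319
"""

NUMERIC_COLUMNS = range(2, 6)  # price, sales, views, stock (0-based indexes)


def non_integer_fields(text):
    """Return (id, column index, value) for each numeric field that is not a plain integer."""
    problems = []
    for row in csv.reader(io.StringIO(text)):
        for col in NUMERIC_COLUMNS:
            value = row[col]
            # str.isdigit() is False for "", "2998.9" and "4500.00-6800.00"
            if not value.isdigit():
                problems.append((row[0], col, value))
    return problems


for problem in non_integer_fields(SAMPLE):
    print(problem)
```

Rows flagged this way are the ones likely to end up with NULLs in the int columns, which the NULL filter in the cleaning step then removes.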

Data cleaning

Upload the data to HDFS

hadoop fs -mkdir -p /college
hadoop fs -put /root/college/loan.csv /college

Create the database and the table

create database db_name;
use db_name;

create table table1(
  id int,
  name string,
  price int,
  views int,
  sales int,
  stock int
)row format delimited
fields terminated by ',';

Load the data into Hive

load data inpath '/college/loan.csv' into table table1;

load data [local] inpath '/root/data' into table psn;

With the optional LOCAL keyword, the path refers to the local filesystem rather than HDFS.

Create a new table to hold the cleaned data

create table table2 like table1;

Data filtering

The filtering query is as follows:

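insert into table2
select id, name, price, views, sales, stock
from table1
-- Remove NULLs. I expected this to be the easiest part, but != and similar comparisons had no effect:
-- any comparison with NULL evaluates to NULL, so only explicit IS NOT NULL checks filter correctly.
where id is not null and price is not null and views is not null and sales is not null and stock is not null
-- Remove outliers that are not electronic products (bags, clothing, women's items)
and name not like '%包包%' and name not like '%衣%' and name not like '%女士%'
-- Grouping by every column removes duplicate rows
group by id, name, price, views, sales, stock;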
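The NULL behaviour described in the comments can be reproduced outside Hive. This sketch uses Python's built-in `sqlite3` module as a stand-in, since SQLite follows the same SQL rule that comparing with NULL yields NULL; the rows are hypothetical and `table1` only mirrors the Hive schema. It shows that `sales != null` matches no rows at all, while the cleaning query drops the NULL row, the non-electronics row, and the duplicate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table table1 (id int, name text, price int, views int, sales int, stock int)"
)
# Hypothetical rows: a duplicate, a row with a NULL, and a non-electronics item.
rows = [
    (4, "Huawei/华为 H60-L01 荣耀6", 1999, 630, 0, 537),
    (4, "Huawei/华为 H60-L01 荣耀6", 1999, 630, 0, 537),
    (5, "Meizu/魅族 MX4 Pro", 2499, 1037, None, 434),
    (7, "纽芝兰包包女士小挎包", 168, 482, 0, 320),
]
conn.executemany("insert into table1 values (?, ?, ?, ?, ?, ?)", rows)

# Any comparison with NULL evaluates to NULL, never true, so "!= null"
# matches no rows at all instead of keeping the non-NULL ones.
ne_null_count = conn.execute(
    "select count(*) from table1 where sales != null"
).fetchone()[0]

# The cleaning query, adapted to SQLite: NULL checks, keyword filter, de-duplication.
cleaned = conn.execute(
    """
    select id, name, price, views, sales, stock from table1
    where id is not null and price is not null and views is not null
      and sales is not null and stock is not null
      and name not like '%包包%' and name not like '%衣%' and name not like '%女士%'
    group by id, name, price, views, sales, stock
    """
).fetchall()

print(ne_null_count)  # 0
print(cleaned)
```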

Analyze the data and export the results

INSERT OVERWRITE LOCAL DIRECTORY '/root/college022/'
ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
SELECT count(distinct author) FROM data;
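`INSERT OVERWRITE LOCAL DIRECTORY` writes one or more data files into the target directory rather than a single file you name yourself. A minimal sketch for collecting the tab-separated rows from all of them, assuming UTF-8 text and that files whose names start with a dot (such as checksum files) can be skipped; `read_hive_export` is a hypothetical helper, and the demo uses a temporary directory with a file named like Hive's usual `000000_0` in place of `/root/college022/`.

```python
import os
import tempfile


def read_hive_export(directory):
    """Collect tab-separated rows from every data file Hive wrote into `directory`."""
    rows = []
    for filename in sorted(os.listdir(directory)):
        path = os.path.join(directory, filename)
        # Skip hidden bookkeeping files (e.g. checksum files) and subdirectories.
        if filename.startswith(".") or not os.path.isfile(path):
            continue
        with open(path, encoding="utf-8") as f:
            for line in f:
                line = line.rstrip("\n")
                if line:
                    rows.append(line.split("\t"))
    return rows


# Demo: a throwaway directory stands in for /root/college022/.
with tempfile.TemporaryDirectory() as demo_dir:
    with open(os.path.join(demo_dir, "000000_0"), "w", encoding="utf-8") as f:
        f.write("42\n")
    with open(os.path.join(demo_dir, ".000000_0.crc"), "w", encoding="utf-8") as f:
        f.write("ignored\n")
    demo_rows = read_hive_export(demo_dir)

print(demo_rows)  # [['42']]
```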

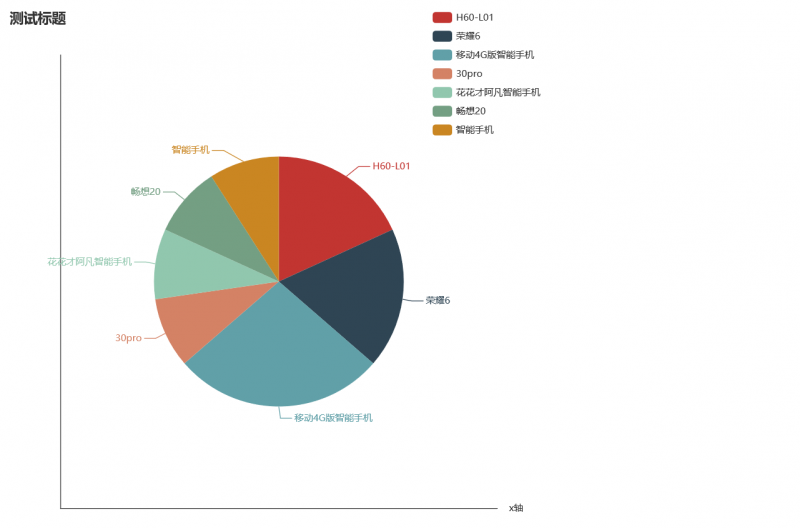

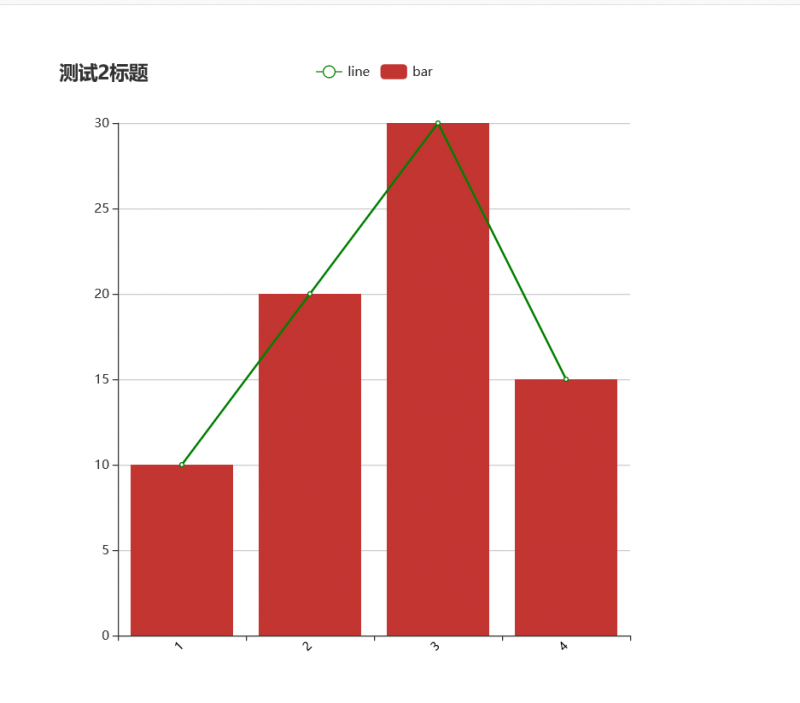

Data visualization

Splitting the product names

select split(name, ' ') from data where split(name, ' ')[0]='Huawei/华为';
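To check the split outside Hive, the same first-token filter can be sketched in Python on a few product names from the sample data. Hive's `split` treats its second argument as a regular expression while Python's `str.split` splits on a literal string; for names separated by single spaces the results match. `tokens_for_brand` is a hypothetical helper name.

```python
# A few product names from the sample data above.
names = [
    "Huawei/华为 H60-L01 荣耀6 移动4G版智能手机 安卓",
    "Meizu/魅族 MX4 Pro移动版 八核大屏智能手机 黑色 16G",
    "Samsung/三星 SM-G8508S GALAXY Alpha四核智能手机 新品 闪耀白",
]


def tokens_for_brand(names, brand):
    """Split each name on single spaces and keep those whose first token equals `brand`."""
    result = []
    for name in names:
        tokens = name.split(" ")
        if tokens[0] == brand:
            result.append(tokens)
    return result


huawei = tokens_for_brand(names, "Huawei/华为")
print(huawei)
```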

After splitting, a simple word count is enough to build the visualization for this part of the task:

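data = []
# Assumption: the original separator was lost in extraction (shown as "�").
# Hive's default delimiter between array items in exported files is "\x02" (Ctrl-B).
with open("sss.txt", "r", encoding='utf-8') as f:
    for line in f:
        data += line.rstrip("\n").split("\x02")

# Count how often each token appears (avoid shadowing the built-in dict)
counts = {}
for key in data:
    counts[key] = counts.get(key, 0) + 1

print(counts.values())
print(counts)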
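The dictionary count above can also be written with `collections.Counter`, whose `most_common` method returns the most frequent tokens directly. As a quick preview before drawing a real chart, this sketch renders the top counts as a text bar chart; the token lists are made-up examples, and `top_tokens` and `text_bar_chart` are hypothetical helpers rather than part of the original workflow. Empty strings are skipped because splitting can produce them.

```python
from collections import Counter


def top_tokens(token_lists, n):
    """Count tokens across all lists (ignoring empty strings) and return the n most common."""
    counts = Counter(token for tokens in token_lists for token in tokens if token)
    return counts.most_common(n)


def text_bar_chart(pairs, width=20):
    """Render (label, count) pairs as rows of '#', scaled so the largest count gets `width` marks."""
    peak = max(count for _, count in pairs)
    lines = []
    for label, count in pairs:
        bar = "#" * max(1, round(count / peak * width))
        lines.append(f"{label} {bar} {count}")
    return "\n".join(lines)


# Made-up token lists standing in for the split product names.
sample = [
    ["Huawei/华为", "荣耀6", "智能手机"],
    ["Huawei/华为", "荣耀7", "智能手机", ""],
    ["Meizu/魅族", "MX4", "智能手机"],
]
pairs = top_tokens(sample, 3)
print(text_bar_chart(pairs))
```

The same `pairs` list can be passed to a plotting library for the final chart.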