Elasticsearch-Based Crawler Engine

Vue Front End

== 1. Connecting to the Elasticsearch API with axios ==

① Set the API base address

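import axios from 'axios'

let base = 'http://127.000.00.00:9200'; // Elasticsearch address to connect to

// Search the "pachong" index and return up to 1000 hits
export const getAvailable = params => {
  return axios.post(`${base}/pachong/_doc/_search?pretty&size=1000`, params)
}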
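The `params` argument above is sent as the search request body, which Elasticsearch expects in its query DSL. A minimal sketch, assuming a hypothetical helper `buildSearchBody` (not part of the original project) and a made-up field value, of how such a body might be built:

```javascript
// Hypothetical helper: builds a search request body in Elasticsearch query DSL.
// With no field it matches every document; otherwise it runs a match query.
function buildSearchBody(field, value) {
  if (field === undefined) {
    return { query: { match_all: {} } }
  }
  return { query: { match: { [field]: value } } }
}

// Example body that could be passed as getAvailable(params);
// 'alibaba' is an illustrative value, not taken from the real data.
const body = buildSearchBody('source', 'alibaba')
console.log(JSON.stringify(body))
```

Calling the helper without arguments gives a `match_all` body, which returns every document up to the `size` limit in the URL.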

② Import the API in the page that needs it

import { getAvailable } from '../../../api/api'

Receive the data returned by the API

getAvail() {
  // Arrow functions keep `this` bound to the component instance
  getAvailable().then((response) => {
    console.log(response.data)
    // Commit the data to the Vuex store so it can be read from anywhere in the app
    this.$store.commit('Avail', response.data)
  }).catch((error) => {
    console.log(error)
  })
},

Vuex setup

1. Install the Vuex state management library

2. Create a store folder

3. Create an index.js file

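import Vue from 'vue'
import Vuex from 'vuex'

// Tell Vue to "use" Vuex
Vue.use(Vuex)

// Create an object that holds the initial state at application start-up,
// i.e. the state that needs to be maintained
const store = new Vuex.Store({
  state: {
    // Initial global values when the app starts
    AvailData: [], // where the data is stored
    /**
     * admin
     * admins
     * id
     * invitation
     * member
     * nick
     */
  },
  mutations: {
    // Replace the stored data; committed as 'Avail' from the page component
    Avail: function (state, msg) {
      state.AvailData = msg
    },
  },
})

// Combining the initial state with the mutation functions gives us the store we need.
// The store can now be connected to the application.
export default store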

Read the data from Vuex

this.$store.state.AvailData

From here, transform and process the data according to its actual format and content.
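As an illustration of that processing step: an Elasticsearch search response wraps each indexed document in `hits.hits[]._source`. The sketch below assumes a hypothetical helper `extractRows` (not in the original project) and a made-up, trimmed sample response.

```javascript
// Hypothetical helper: pulls the original documents out of a search response.
// Returns an empty array when the response has no hits section.
function extractRows(searchResponse) {
  const hits =
    (searchResponse && searchResponse.hits && searchResponse.hits.hits) || []
  return hits.map((hit) => hit._source)
}

// Sample response trimmed to the fields used here (values are made up)
const sample = {
  hits: {
    hits: [
      { _index: 'pachong', _id: '1', _source: { source: 'a', Location: 'b' } },
    ],
  },
}
console.log(extractRows(sample))
```

A component could apply this to `this.$store.state.AvailData` before binding the rows to a table or chart.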

Elasticsearch Back End

Installation and deployment

Uploading CSV files to Elasticsearch

1. Install Logstash

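Extract the package: tar -zxvf logstash-7.3.2.tar.gz

Move the extracted directory to /usr/local/: mv logstash-7.3.2 /usr/local/

cd /usr/local/

Rename it: mv logstash-7.3.2 logstash

Configuration file directory: /usr/local/logstash/config

Writing the Logstash configuration files: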

Crawler data format (page 1)

Source

Location

Machine configuration

Number of records

Number of error logs

Format: ("source","Location","Email","Machine configuration","Number of records","Number of error logs")

Write the Logstash configuration abc-pc.conf:

input {
  file {
    path => ["/root/ccc.txt"]
    start_position => "beginning"
  }
}

filter {
  csv {
    separator => ","
    columns => ["source","Location","Email","Machineconfiguration","Numberofrecords","Numberoferrorlogs"]
  }
}

output {
  elasticsearch {
    hosts => ["master:9200"]
    index => "pachong"
  }
}
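To make the column mapping concrete, the following sketch mimics what the csv filter does with one input line: each value is assigned, in order, to the configured column name. This is a simplified illustration and not Logstash's implementation; `csvLineToDoc` is a hypothetical name, the sample line is made up, and quoted fields or embedded separators are not handled.

```javascript
// Simplified illustration of positional CSV-to-field mapping.
// Assumes no quoted fields and no separators inside values.
function csvLineToDoc(line, columns, separator = ',') {
  const values = line.split(separator)
  const doc = {}
  columns.forEach((name, i) => {
    // Only map columns that actually have a value on this line
    if (i < values.length) doc[name] = values[i]
  })
  return doc
}

const columns = [
  'source', 'Location', 'Email',
  'Machineconfiguration', 'Numberofrecords', 'Numberoferrorlogs',
]
// Made-up sample line in the page 1 format
const doc = csvLineToDoc('siteA,Beijing,a@example.com,4c8g,1200,3', columns)
console.log(doc)
```

Every value in the resulting object is a string. The csv filter behaves the same way unless its `convert` option is set.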

Run Logstash: ./bin/logstash -f config/abc-pc.conf --path.data=/root/log

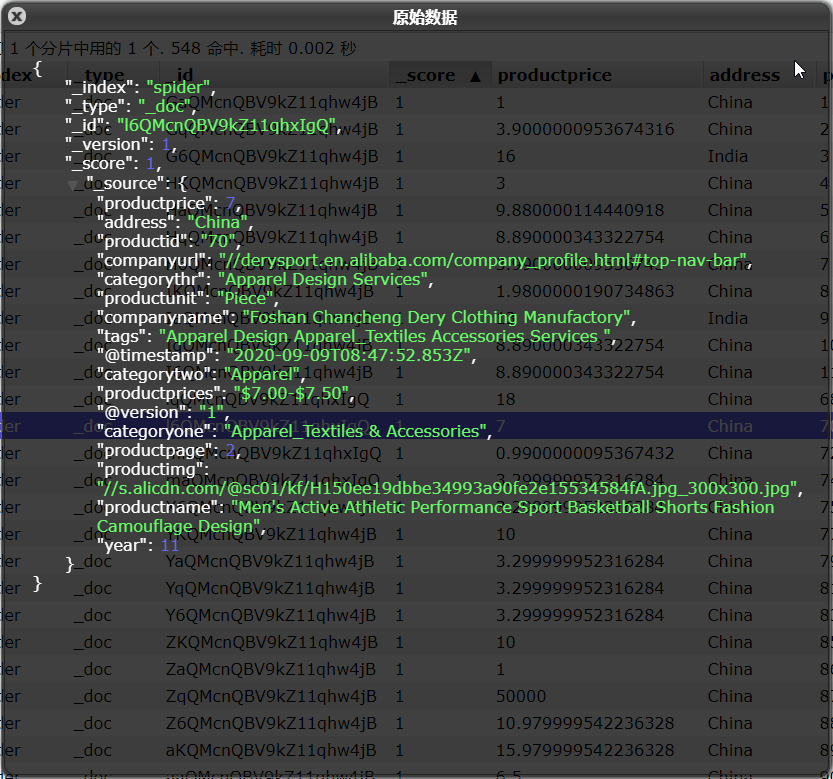

Result screenshot:

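Crawler data format (page 2)

In progress count:

Completed count:

Pending count:

Total resource progress:

Task name:

Task status:

Format: ("In progress:","Completed number:","Number to be completed:","Total progress of resources:","Task name","Task status:")

Write the Logstash configuration abc-pcwcjd.conf: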

input {
  file {
    path => ["/root/ooo.txt"]
    start_position => "beginning"
  }
}

filter {
  csv {
    separator => ","
    columns => ["In progress:","Completed number:","Number to be completed:","Total progress of resources:","Task name","Task status:"]
  }
}

output {
  elasticsearch {
    hosts => ["master:9200"]
    index => "pachongzhuangtai"
  }
}
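Because this csv filter does not set `convert`, the counts arrive in `_source` as strings. A front end that charts them would likely need numbers, so here is a minimal sketch with a hypothetical helper `toNumericFields`. The helper and the sample document are assumptions for illustration only.

```javascript
// Hypothetical helper: returns a copy of doc with the listed fields
// converted to numbers; values that are not numeric are left untouched.
function toNumericFields(doc, fields) {
  const out = { ...doc }
  for (const name of fields) {
    const raw = out[name]
    const n = Number(raw)
    if (raw !== undefined && raw !== '' && !Number.isNaN(n)) {
      out[name] = n
    }
  }
  return out
}

// Made-up document using the page 2 column names
const statusDoc = { 'In progress:': '3', 'Completed number:': '12', 'Task name': 'demo' }
const converted = toNumericFields(statusDoc, ['In progress:', 'Completed number:', 'Task name'])
console.log(converted)
```

The original object is not modified, so it is safe to apply this to data read from the Vuex store.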

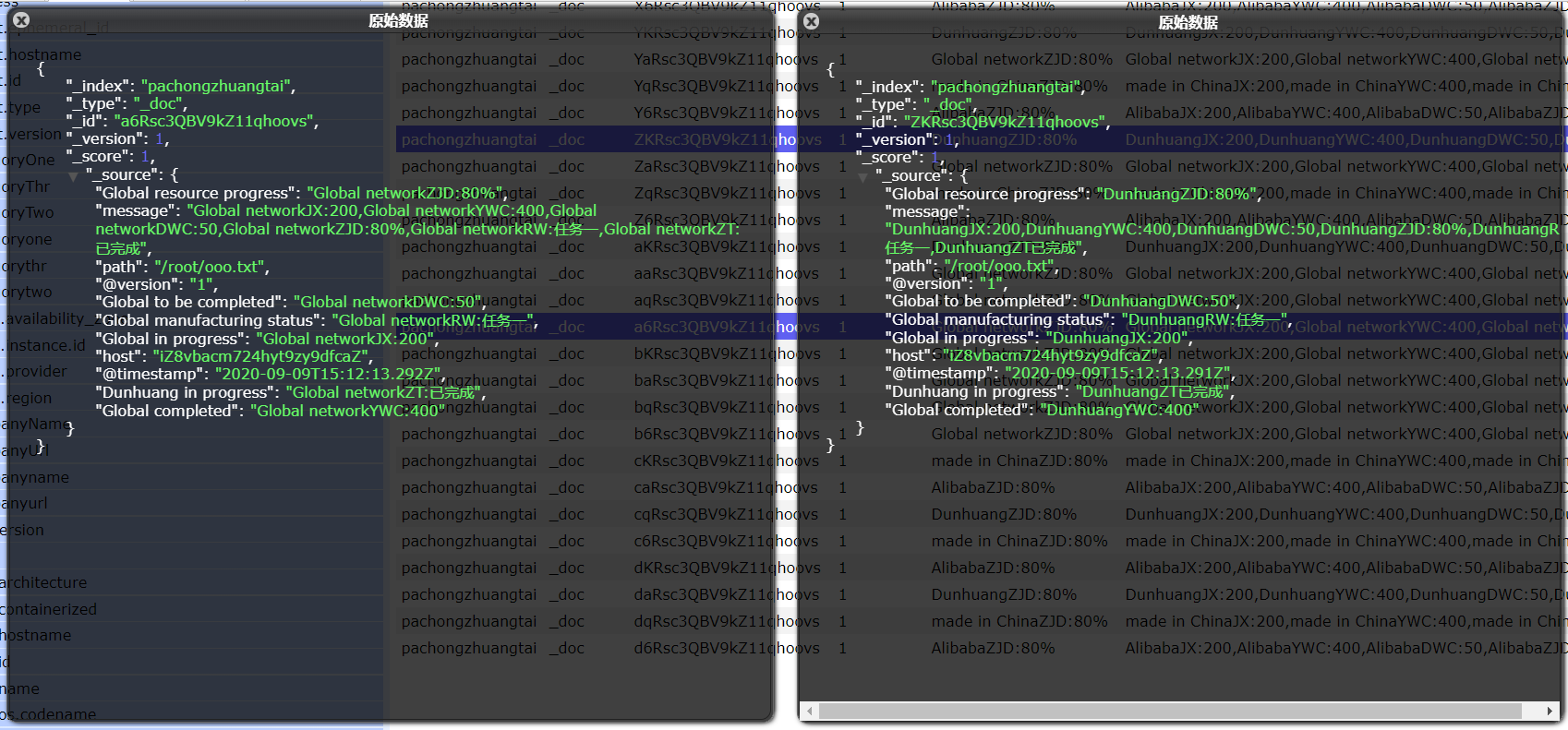

Run Logstash: ./bin/logstash -f config/abc-pcwcjd.conf --path.data=/root/pcjd

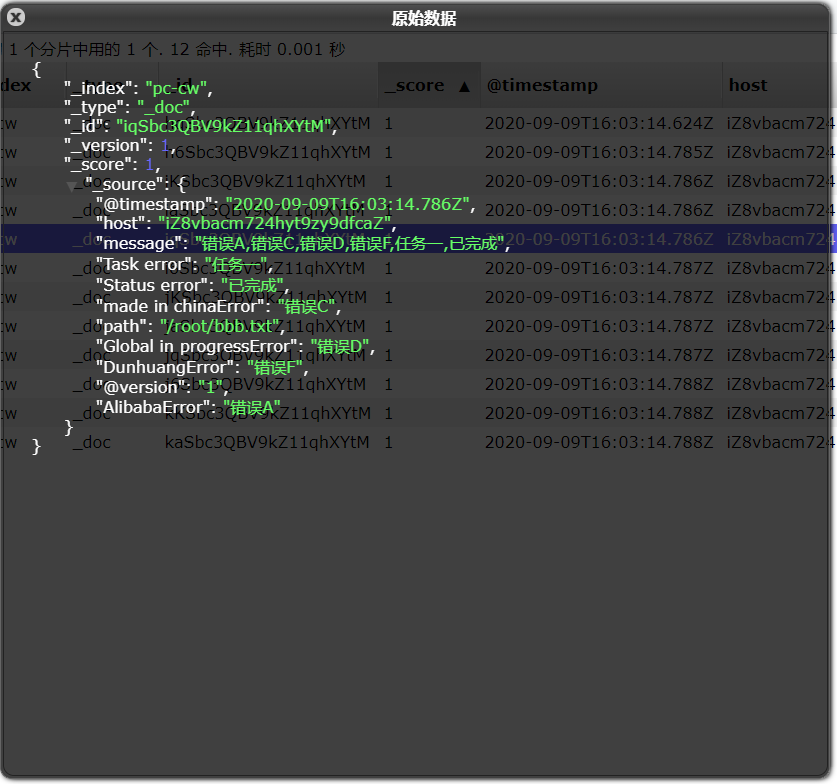

Result screenshot:

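Crawler data format (page 3)

Alibaba error distribution:

Made-in-China error distribution:

Dunhuang error distribution:

Crawler task distribution:

Crawler status distribution:

Format: ("AlibabaError","made in chinaError","Global in progressError","DunhuangError","Task error","Status error")

Write the Logstash configuration abc-cw.conf: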

input {
  file {
    path => ["/root/bbb.txt"]
    start_position => "beginning"
  }
}

filter {
  csv {
    separator => ","
    columns => ["AlibabaError","made in chinaError","Global in progressError","DunhuangError","Task error","Status error"]
  }
}

output {
  elasticsearch {
    hosts => ["master:9200"]
    index => "pc-cw"
  }
}
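With the three indices in place (`pachong`, `pachongzhuangtai`, `pc-cw`), the front end could query each one using the same request pattern as `getAvailable`. The sketch below assumes a hypothetical helper `searchUrl` and uses the typeless `/_search` endpoint. The base address is taken from the Logstash `hosts` setting, which may differ from the address the browser can reach.

```javascript
// Index names taken from the three Logstash outputs in this section
const INDICES = ['pachong', 'pachongzhuangtai', 'pc-cw']

// Hypothetical helper: builds the search URL for one index.
function searchUrl(base, index, size = 1000) {
  return `${base}/${encodeURIComponent(index)}/_search?size=${size}`
}

// One URL per index; each could be passed to axios.post together with a query body
const urls = INDICES.map((index) => searchUrl('http://master:9200', index))
console.log(urls)
```

Keeping the index names in one list makes it easier to add a fourth page later without touching the request code.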