Collecting and Analyzing Data from a Discuz Forum

From CloudWiki


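Target Environment

- Discuz! is a well-known open-source PHP forum system in China. It is built on PHP and MySQL and runs efficiently in a wide range of server environments. Visiting the target site's IP address directly opens the forum home page. The forum's default module contains 5,800+ main threads and 1,700+ replies, for a total of 7,500+ valid posts, and has 550+ members. The available information includes: forum board, thread author, reply author, thread author ID, thread author name, reply author ID, reply author name, user avatar, thread content, reply content, thread ID, and reply ID.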

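Logical Diagram

The logical relationships are:

1. A forum board has many threads.
2. A user has many threads.
3. A user has many replies.
4. A thread has many replies.
5. A thread contains: thread ID, thread title, thread content, and author ID.
6. A reply contains: thread ID, reply ID, reply content, and reply author ID.
7. A user contains: user ID, name, and avatar.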
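The relationships listed here can be sketched as a small data model. This is only an illustration: the class names (`User`, `Reply`, `Thread`, `Board`) and field names are assumptions chosen for readability and do not come from Discuz or from the scraper on this page.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class User:
    uid: str
    name: str
    avatar_url: str = ""  # avatar location; empty when unknown


@dataclass
class Reply:
    reply_id: str
    thread_id: str   # the thread this reply belongs to
    author_uid: str  # the user who wrote the reply
    content: str


@dataclass
class Thread:
    thread_id: str
    title: str
    content: str
    author_uid: str  # the user who started the thread
    replies: List[Reply] = field(default_factory=list)


@dataclass
class Board:
    name: str
    threads: List[Thread] = field(default_factory=list)


# Made-up sample data: one board, one thread, one reply
alice = User(uid="1", name="alice")
thread = Thread(thread_id="10", title="Hello", content="First post",
                author_uid=alice.uid)
thread.replies.append(
    Reply(reply_id="11", thread_id=thread.thread_id,
          author_uid="2", content="Welcome")
)
board = Board(name="General", threads=[thread])
```

Storing IDs (`author_uid`, `thread_id`) rather than nested objects keeps each record flat, which is convenient if the data is later exported to tables.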

Procedure

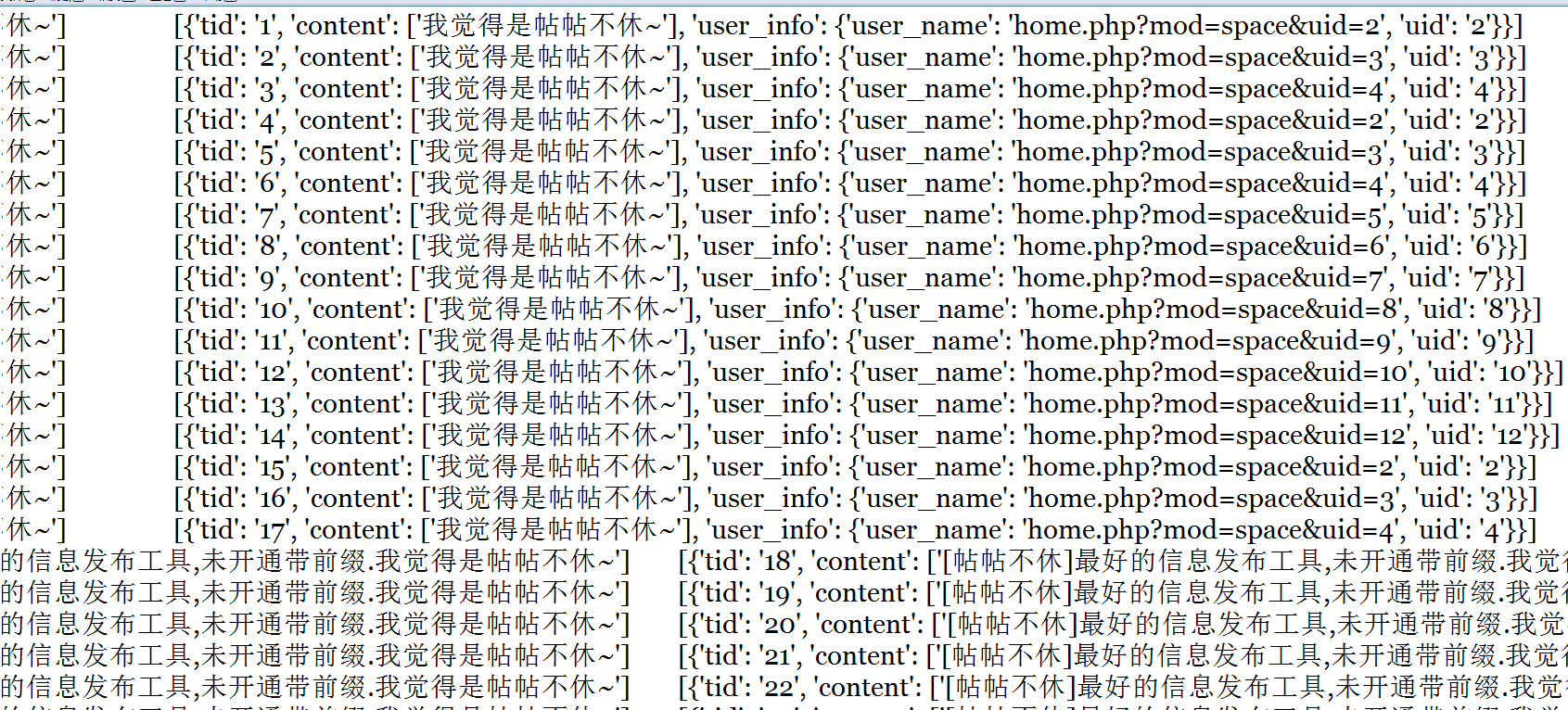

Code Implementation

import requests
from bs4 import BeautifulSoup
from urllib.parse import urlparse, parse_qs

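# Fetch the page source; return False if the request fails or the thread is missing
def get_url_content(url):
    response = requests.get(url, timeout=10)
    if response.status_code == 200:
        # A missing thread still returns HTTP 200, with a notice saying the thread
        # does not exist, was deleted, or is under review; match on that notice
        if "指定的主题不存在或已被删除或正在被审核" in response.text:
            return False
        else:
            return response.text
    else:
        return False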

# Parse one thread page into a dict with its title, URL, thread ID, author, and posts
def parse_post_data(html_text):
    soup_object = BeautifulSoup(html_text, 'lxml')
    title = soup_object.title.string
    # The first <link> tag is assumed to carry the thread URL
    url = soup_object.link['href']
    parsed_url = urlparse(url)
    query_string_object = parse_qs(parsed_url.query)
    tid = query_string_object['tid'][0]
    user_list = get_post_userlist(soup_object)
    content_list = get_post_content_list(soup_object)
    # Attach each author to the matching post; zip stops at the shorter list
    for content_item, user_info in zip(content_list, user_list):
        content_item["user_info"] = user_info
    post_content_info = {
        'title': title,
        'url': url,
        'tid': tid,
        'author': user_list[0],
        'content': content_list[0]['content'],
        # All posts on the page, including the opening post
        'comments': content_list
    }
    return post_content_info

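# Collect the author of every post on the page
def get_post_userlist(post_soup_object):
    user_info_doms = post_soup_object.select(".authi")
    user_list = []
    for i in range(len(user_info_doms)):
        # Only even-indexed ".authi" elements are used, presumably because
        # each post renders two of them
        if i % 2 == 0:
            link = user_info_doms[i].a
            # The user name is the link text, not the link target
            user_name = link.get_text(strip=True)
            # The uid is a query-string parameter of the profile link
            uid = parse_qs(urlparse(link['href']).query)['uid'][0]
            user_list.append({"user_name": user_name, "uid": uid})
    return user_list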

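# Collect the body of every post on the page
def get_post_content_list(post_soup_object):
    content_object_list = post_soup_object.select('.t_f')
    content_list = []
    for content_object in content_object_list:
        # The element id is split on "_" and the second part is kept;
        # it is stored under the key "tid" although it identifies the post
        postmessage_id = content_object['id']
        tid = postmessage_id.split('_')[1]
        # Collapse runs of whitespace into single spaces
        content = ' '.join(content_object.text.split())
        content_list.append({"tid": tid, "content": content})
    return content_list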

def main():
    max_tid = input('Enter the maximum thread ID: ')
    heads = ['title', 'url', 'tid', 'author', 'content', 'comments']
    # Start at 1 (there is no thread 0) and include max_tid itself
    for page in range(1, int(max_tid) + 1):
        url = 'http://114.112.74.138/forum.php?mod=viewthread&tid='+str(page)+'&extra=page%3D3'
        ret = get_url_content(url)
        if ret != False:
            row = ''
            data = parse_post_data(ret)
            for item in heads:
                row = row + str(data.get(item)) + '\t'
            row = row + '\n'
            # Append one tab-separated line per thread
            with open('hongya.txt', 'a+', encoding='utf-8') as f:
                f.write(row)
        else:
            print("not found")

if __name__ == '__main__':
    main()
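The script writes each thread as a tab-separated line and calls `str()` on nested dictionaries, which is hard to parse back if a post contains tabs or newlines. One possible alternative, sketched here under assumptions, is to write CSV with the standard `csv` module and store nested values as JSON text. The helper name `write_rows` and the sample record are hypothetical; the record only mimics the shape returned by `parse_post_data`.

```python
import csv
import io
import json

HEADS = ['title', 'url', 'tid', 'author', 'content', 'comments']


def write_rows(records, out_file):
    """Write thread records as CSV; nested values are stored as JSON text."""
    writer = csv.DictWriter(out_file, fieldnames=HEADS)
    writer.writeheader()
    for record in records:
        row = {}
        for key in HEADS:
            value = record.get(key)
            # Dicts and lists (author, comments) are serialized as JSON
            if isinstance(value, (dict, list)):
                value = json.dumps(value, ensure_ascii=False)
            row[key] = value
        writer.writerow(row)


# Made-up record in the shape returned by parse_post_data
sample = {
    'title': 'Hello',
    'url': 'http://example.com/forum.php?mod=viewthread&tid=1',
    'tid': '1',
    'author': {'user_name': 'alice', 'uid': '2'},
    'content': 'First post',
    'comments': [{'tid': '5', 'content': 'First post',
                  'user_info': {'user_name': 'alice', 'uid': '2'}}],
}

# An in-memory buffer stands in for a real file in this demo
buffer = io.StringIO(newline='')
write_rows([sample], buffer)

# Reading the CSV back recovers the nested author dict
buffer.seek(0)
restored = next(csv.DictReader(buffer))
restored_author = json.loads(restored['author'])
```

To write to disk instead, the buffer could be replaced with a file opened as `open('hongya.csv', 'w', newline='', encoding='utf-8')`; the file name is only an example.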