2021 高寻真源: Installing Spark

Installing Scala

Download and extract Scala

Note: Spark 2.x is pre-built with Scala 2.11, except version 2.4.2, which is pre-built with Scala 2.12. Spark 3.0+ is pre-built with Scala 2.12.

Download page: https://www.scala-lang.org/download/2.12.13.html

scala-2.12.13.tgz

Move the downloaded binary package into the BD directory (/home/maxin/BD in this walkthrough) and extract it there.
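The extraction step can also be done from the terminal. The following is a minimal sketch, assuming the archive has already been downloaded; `extract_into` is a hypothetical helper name introduced here for illustration, not something shipped with Scala or Spark.

```shell
#!/bin/sh
# extract_into ARCHIVE DEST
# Hypothetical helper: unpack a .tgz archive into DEST, creating DEST if needed.
extract_into() {
    archive=$1   # path to the downloaded .tgz file
    dest=$2      # directory to extract into, for example "$HOME/BD"
    if [ ! -f "$archive" ]; then
        echo "archive not found: $archive" >&2
        return 1
    fi
    # -x extract, -z gunzip, -f read from file, -C change to DEST first
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}

# Example call (the location of the downloaded file is an assumption):
#   extract_into "$HOME/Downloads/scala-2.12.13.tgz" "$HOME/BD"
```

After the call, the directory scala-2.12.13 should exist under the destination, which is the path used for SCALA_HOME later on.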

Set the environment variables

gedit ~/.bashrc

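Once the file is open, add the following configuration at the bottom:

export SCALA_HOME=/home/maxin/BD/scala-2.12.13

export PATH=$SCALA_HOME/bin:$PATH

Save and exit, then run the following command to apply the changes: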

source ~/.bashrc
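If a graphical editor is not available, the same lines can be appended from the shell. The sketch below assumes a POSIX shell; `append_line_once` is a hypothetical helper that skips the append when an identical line is already present, so re-running it does not pile up duplicate entries.

```shell
#!/bin/sh
# append_line_once FILE LINE
# Hypothetical helper: append LINE to FILE unless an identical line already exists.
append_line_once() {
    file=$1
    line=$2
    touch "$file"
    # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
    if ! grep -qxF -e "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example calls; single quotes keep $SCALA_HOME and $PATH unexpanded in the file:
#   append_line_once "$HOME/.bashrc" 'export SCALA_HOME=/home/maxin/BD/scala-2.12.13'
#   append_line_once "$HOME/.bashrc" 'export PATH=$SCALA_HOME/bin:$PATH'
```

The two exports are written as separate lines so that SCALA_HOME is already set when the PATH line is evaluated.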

Verify the Scala installation

Run scala -version:

Scala code runner version 2.11.12 -- Copyright 2002-2017, LAMP/EPFL

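If the Scala version information is printed, the installation succeeded. Note that the sample output above was captured with Scala 2.11.12; with the scala-2.12.13 package installed in this guide, the reported version should be 2.12.13.

Alternatively, run scala to start the interactive shell:

If the Scala version and related information appear, as in the banner below, the installation succeeded.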

Welcome to Scala 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181).

Type in expressions for evaluation. Or try :help.

You can now run Scala commands.
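Because the note earlier ties each Spark line to a Scala binary version (2.12 for Spark 3.0+), it can help to check the major.minor part of the reported version rather than just eyeballing it. A minimal sketch, assuming the banner has the "version x.y.z" shape shown above; `scala_binary_version` is a hypothetical helper name.

```shell
#!/bin/sh
# scala_binary_version TEXT
# Hypothetical helper: print the major.minor part of the "version x.y.z"
# string found in TEXT (for example the output of `scala -version`).
scala_binary_version() {
    printf '%s\n' "$1" \
        | sed -n 's/.*version \([0-9][0-9]*\.[0-9][0-9]*\)\.[0-9][0-9]*.*/\1/p' \
        | head -n 1
}

# Example use; 2>&1 captures the banner whichever stream it is written to:
#   actual=$(scala_binary_version "$(scala -version 2>&1)")
#   [ "$actual" = "2.12" ] || echo "expected Scala 2.12, found $actual" >&2
```

Applied to the sample output shown earlier, the helper yields 2.11, which would flag the mismatch with the 2.12 build expected by Spark 3.0+.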

Download Spark

Use a mirror hosted in China:

https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.0.1/

spark-3.0.1-bin-hadoop2.7.tgz
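The package name follows a regular pattern (Spark version plus Hadoop profile), so the download URL can be assembled rather than typed by hand. The sketch below only builds the strings; `spark_package_name` and `spark_download_url` are hypothetical helpers, and the example mirror base is the one given above. Mirrors tend to keep only recent releases, so it is an assumption that this particular release is still served there.

```shell
#!/bin/sh
# spark_package_name VERSION HADOOP_PROFILE
# Hypothetical helper: print the archive name for a pre-built Spark package.
spark_package_name() {
    printf 'spark-%s-bin-hadoop%s.tgz\n' "$1" "$2"
}

# spark_download_url MIRROR_BASE VERSION HADOOP_PROFILE
# Hypothetical helper: print MIRROR_BASE/spark-VERSION/<archive name>.
spark_download_url() {
    base=${1%/}   # drop one trailing slash, if any
    printf '%s/spark-%s/%s\n' "$base" "$2" "$(spark_package_name "$2" "$3")"
}

# Example use (assumes wget is installed):
#   wget "$(spark_download_url https://mirrors.tuna.tsinghua.edu.cn/apache/spark 3.0.1 2.7)"
```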

Configure Spark

maxin@maxin-virtual-machine:~$ cd BD

maxin@maxin-virtual-machine:~/BD$ ls

scala-2.12.13 spark-3.0.1-bin-hadoop2.7 scala-2.12.13.tgz spark-3.0.1-bin-hadoop2.7.tgz

maxin@maxin-virtual-machine:~/BD$ cd spark-3.0.1-bin-hadoop2.7/

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7$ ls

bin data jars LICENSE NOTICE R RELEASE yarn conf examples kubernetes licenses python README.md sbin

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7$ cd conf

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7/conf$ ls

fairscheduler.xml.template slaves.template log4j.properties.template spark-defaults.conf.template metrics.properties.template spark-env.sh.template

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7/conf$ cp spark-env.sh.template spark-env.sh

gedit spark-env.sh

SPARK_LOCAL_IP=127.0.0.1

JAVA_HOME=/usr/lib/jvm/java-8-oracle/jdk1.8.0_221

HADOOP_CONF_DIR=/home/maxin/hadoop-2.7.6/etc/hadoop/

YARN_CONF_DIR=/home/maxin/hadoop-2.7.6/etc/hadoop/

LD_LIBRARY_PATH=$HADOOP_HOME/lib/native/
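The same settings can be written without opening an editor. This is a rough sketch rather than the procedure used above: `write_spark_env` is a hypothetical helper, and it overwrites the target file instead of editing the copied template (the template itself consists of comments). It assumes that HADOOP_CONF_DIR and YARN_CONF_DIR point at the same directory, as they do in this walkthrough.

```shell
#!/bin/sh
# write_spark_env TARGET JAVA_HOME_DIR HADOOP_CONF
# Hypothetical helper: write the five settings from this walkthrough to TARGET,
# replacing any existing content of that file.
write_spark_env() {
    target=$1
    java_home=$2
    hadoop_conf=$3
    {
        printf 'SPARK_LOCAL_IP=127.0.0.1\n'
        printf 'JAVA_HOME=%s\n' "$java_home"
        printf 'HADOOP_CONF_DIR=%s\n' "$hadoop_conf"
        printf 'YARN_CONF_DIR=%s\n' "$hadoop_conf"
        # Single quotes keep $HADOOP_HOME literal, so it is expanded later,
        # when the file is sourced, not when this helper runs.
        printf '%s\n' 'LD_LIBRARY_PATH=$HADOOP_HOME/lib/native/'
    } > "$target"
}

# Example call, run from the conf directory, with the paths used in this walkthrough:
#   write_spark_env spark-env.sh /usr/lib/jvm/java-8-oracle/jdk1.8.0_221 \
#       /home/maxin/hadoop-2.7.6/etc/hadoop/
```

The last setting relies on HADOOP_HOME being defined in the environment; if it is unset, the path collapses to /lib/native/.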

Set the environment variables

gedit ~/.bashrc

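Once the file is open, add the following configuration at the bottom:

export SPARK_HOME=/home/maxin/BD/spark-3.0.1-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin

Save and exit, then run the following command to apply the changes: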

source ~/.bashrc
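A quick way to confirm that the change took effect is to check whether the Spark bin directory is now an entry of PATH. A minimal sketch, using only shell built-ins; `path_contains` is a hypothetical helper name.

```shell
#!/bin/sh
# path_contains DIR
# Hypothetical helper: succeed if DIR is one of the colon-separated PATH entries.
path_contains() {
    # Wrapping PATH in colons lets first and last entries match the same pattern.
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example use after `source ~/.bashrc`:
#   path_contains "$SPARK_HOME/bin" || echo "SPARK_HOME/bin is not on PATH" >&2
```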

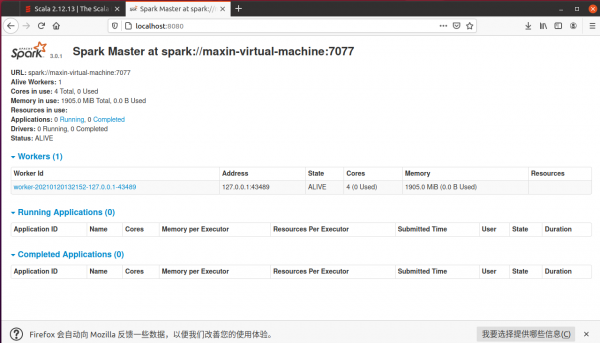

Start Spark and verify

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7/conf$ cd /home/maxin/BD/spark-3.0.1-bin-hadoop2.7

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7$ ls

bin data jars LICENSE NOTICE R RELEASE yarn conf examples kubernetes licenses python README.md sbin

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7$ sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /home/maxin/BD/spark-3.0.1-bin-hadoop2.7/logs/spark-maxin-org.apache.spark.deploy.master.Master-1-maxin-virtual-machine.out

localhost: starting org.apache.spark.deploy.worker.Worker, logging to /home/maxin/BD/spark-3.0.1-bin-hadoop2.7/logs/spark-maxin-org.apache.spark.deploy.worker.Worker-1-maxin-virtual-machine.out

maxin@maxin-virtual-machine:~/BD/spark-3.0.1-bin-hadoop2.7$ jps

10976 Master

8353 DataNode

11203 Jps

11109 Worker

8549 SecondaryNameNode

8812 NodeManager

8684 ResourceManager

8189 NameNode
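In the jps listing, Master and Worker are the two processes started by sbin/start-all.sh; the remaining entries belong to Hadoop. The check can be scripted as follows. This is a sketch that assumes the usual "PID name" layout of jps output; `has_daemon` is a hypothetical helper name.

```shell
#!/bin/sh
# has_daemon NAME
# Hypothetical helper: read jps output on stdin and succeed if a process
# whose name (second column) equals NAME is listed.
has_daemon() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit (found ? 0 : 1) }'
}

# Example use:
#   for d in Master Worker; do
#       jps | has_daemon "$d" || echo "$d is not running" >&2
#   done
```

Matching on the exact second column avoids false positives from names that merely contain the word, which a plain substring search would accept.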