PySpark in Practice: Setting Up a Spark Environment on Windows

Install Java

java -version

java version "1.8.0_45"

Java(TM) SE Runtime Environment (build 1.8.0_45-b15)

Java HotSpot(TM) 64-Bit Server VM (build 25.45-b02, mixed mode)

C:\Users\maxin>javac

Usage: javac <options> <source files>

where possible options include:

-g Generate all debugging info

-g:none Generate no debugging info

-g:{lines,vars,source} Generate only some debugging info

-nowarn Generate no warnings
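
Spark 2.4.x is generally run on Java 8, so it can be handy to check the `java -version` banner programmatically. The following is a minimal sketch rather than part of the original setup: `parse_java_version` and `is_java_8` are hypothetical helper names, and the banner is passed in as a string instead of being captured from the command (note that `java -version` writes its banner to stderr).

```python
import re


def parse_java_version(banner):
    """Return the numeric parts of the version quoted in a `java -version` banner."""
    match = re.search(r'version "([^"]+)"', banner)
    if match is None:
        return None
    # Split "1.8.0_45" on dots and underscores; keep only the purely numeric parts.
    return tuple(int(p) for p in re.split(r"[._]", match.group(1)) if p.isdigit())


def is_java_8(banner):
    """True for legacy-style version strings such as 1.8.0_45 (Java 8)."""
    version = parse_java_version(banner)
    return version is not None and version[:2] == (1, 8)


banner = 'java version "1.8.0_45"'
print(parse_java_version(banner))  # (1, 8, 0, 45)
print(is_java_8(banner))           # True
```

To use it against a real installation, the banner could be read with `subprocess.run(["java", "-version"], capture_output=True, text=True).stderr`.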

Install Python 3.7

C:\Users\maxin>python -V

Python 3.7.6

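Install Hadoop 2.7

Download Hadoop 2.7:

https://archive.apache.org/dist/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

Extract the Hadoop 2.7 archive to drive D.

If extraction reports errors, run the archive tool as administrator, as explained in this article: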

https://blog.csdn.net/qq_42000661/article/details/117396954

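Configure Hadoop 2.7

Download the Windows support files:

https://github.com/steveloughran/winutils

Hadoop is developed primarily for Linux; winutils.exe mainly emulates the Linux-style directory and file environment that Hadoop expects on Windows.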

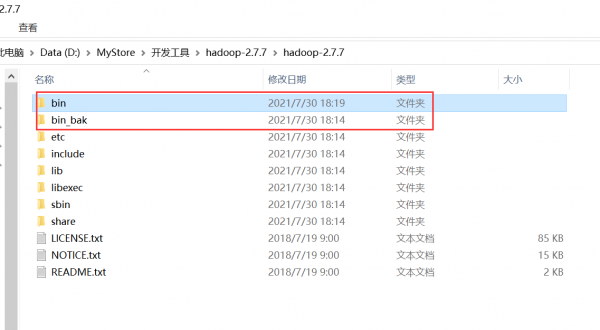

In the repository, find the Hadoop 2.7 version and copy its bin folder into the Hadoop folder.

Before copying, rename the original bin folder to bin_bak.
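
The rename-then-copy step can also be scripted. The sketch below is only an illustration under assumptions: `swap_in_winutils_bin` is a hypothetical helper, and the paths in the trailing comment are placeholders, not locations taken from this article.

```python
import shutil
from pathlib import Path


def swap_in_winutils_bin(hadoop_dir, winutils_bin):
    """Rename hadoop_dir/bin to bin_bak, then copy winutils_bin in as the new bin."""
    hadoop_dir = Path(hadoop_dir)
    original = hadoop_dir / "bin"
    backup = hadoop_dir / "bin_bak"
    if backup.exists():
        # Never clobber an existing backup of the stock scripts.
        raise FileExistsError(f"{backup} already exists; refusing to overwrite it")
    original.rename(backup)                  # keep the stock bin folder as a backup
    shutil.copytree(winutils_bin, original)  # bring in the Windows-ready bin folder
    return backup


# Hypothetical usage (placeholder paths):
# swap_in_winutils_bin(r"D:\hadoop-2.7.7", r"D:\downloads\winutils\<hadoop 2.7 folder>\bin")
```

Refusing to run when bin_bak already exists keeps the script from silently destroying the original scripts if it is executed twice.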

Tip: to download an entire folder from GitHub, paste the folder's GitHub URL into GitZip: http://kinolien.github.io/gitzip/

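Edit the hadoop-env.cmd file under hadoop-2.7.7\etc\hadoop and change the existing JAVA_HOME setting to:

set JAVA_HOME=D:\Java\jdk1.8.0_45

Note: do not put a space after the equals sign, because cmd stores it as part of the value. If JAVA_HOME is on drive C or its path contains spaces, Hadoop may fail to start; in that case, copy the Java folder to the root of drive D and point JAVA_HOME at the copy.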
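
The path caveats in the note above can be checked before editing hadoop-env.cmd. This is a small sketch under assumptions: `java_home_warnings` is a hypothetical helper, and the rules it applies (stray whitespace, spaces in the path, drive C) simply mirror the note rather than any official Hadoop check.

```python
import ntpath


def java_home_warnings(java_home):
    """List reasons why a JAVA_HOME value may trip up hadoop-env.cmd."""
    warnings = []
    trimmed = java_home.strip()
    if java_home != trimmed:
        # `set NAME= value` in cmd keeps the space as part of the value.
        warnings.append("leading or trailing whitespace")
    if " " in trimmed:
        warnings.append("space inside the path")
    drive, _ = ntpath.splitdrive(trimmed)  # ntpath parses Windows paths on any OS
    if drive.upper() == "C:":
        warnings.append("located on drive C:")
    return warnings


print(java_home_warnings(r"D:\Java\jdk1.8.0_45"))                # []
print(java_home_warnings(r"C:\Program Files\Java\jdk1.8.0_45"))  # two warnings
```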

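To run hadoop commands from any directory, create an environment variable named HADOOP_HOME whose value is the Hadoop extraction directory, for example D:\MyStore\开发工具\hadoop-2.7.7\hadoop-2.7.7

Then edit the Path environment variable and add %HADOOP_HOME%\bin

After that, hadoop commands can be run directly in a newly opened cmd window.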
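
If the hadoop command is still not found, a quick check of the environment can narrow down the cause. The sketch below assumes a hypothetical helper, `check_hadoop_home`, that inspects an environment mapping for the two settings described above and for winutils.exe in the bin folder.

```python
import os


def _normalize(path):
    """Normalize a path so equivalent spellings compare equal."""
    return os.path.normcase(os.path.normpath(path))


def check_hadoop_home(env):
    """Return a list of problems with HADOOP_HOME and PATH in the given mapping."""
    home = env.get("HADOOP_HOME")
    if not home:
        return ["HADOOP_HOME is not set"]
    problems = []
    bin_dir = os.path.join(home, "bin")
    if not os.path.isfile(os.path.join(bin_dir, "winutils.exe")):
        problems.append("winutils.exe is missing from the bin folder")
    # Windows has already expanded %HADOOP_HOME% by the time Python reads PATH.
    on_path = [_normalize(p) for p in env.get("PATH", "").split(os.pathsep) if p]
    if _normalize(bin_dir) not in on_path:
        problems.append("bin folder is not on PATH")
    return problems


for problem in check_hadoop_home(os.environ):
    print("Problem:", problem)
```

An empty result means both variables look consistent; it does not prove that Hadoop itself is configured correctly.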

Output:

C:\Users\maxin>hadoop version

Hadoop 2.7.7

Subversion Unknown -r c1aad84bd27cd79c3d1a7dd58202a8c3ee1ed3ac

Compiled by stevel on 2018-07-18T22:47Z

Compiled with protoc 2.5.0

From source with checksum 792e15d20b12c74bd6f19a1fb886490

This command was run using /D:/MyStore/开发工具/hadoop-2.7.7/hadoop-2.7.7/share/hadoop/common/hadoop-common-2.7.7.jar

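Install Spark 2.4.5

Download Spark: https://archive.apache.org/dist/spark/spark-2.4.5/

Extract the spark-2.4.5 archive into a folder of the same name.

Create an environment variable named SPARK_HOME with the value D:\MyStore\spark-2.4.5-bin-hadoop2.7

Edit the Path environment variable and add %SPARK_HOME%\bin

Verify

Open a command prompt window and enter pyspark: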

C:\Users\maxin>pyspark

Python 3.7.6 (tags/v3.7.6:43364a7ae0, Dec 19 2019, 00:42:30) [MSC v.1916 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.5
      /_/

Using Python version 3.7.6 (tags/v3.7.6:43364a7ae0, Dec 19 2019 00:42:30)

SparkSession available as 'spark'.

>>>

Enter quit() to exit the pyspark interactive shell.

Run the command: spark-submit %SPARK_HOME%/examples/src/main/python/pi.py

Output: Pi is roughly 3.140440
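
The printed value is only approximate, and it changes slightly from run to run, because the bundled pi.py example estimates pi by random sampling. The sketch below reproduces the same idea in plain Python without Spark, as a rough illustration only: `inside_unit_circle` and `estimate_pi` are hypothetical names, and the fixed seed is there just to make the sketch repeatable.

```python
import random
from functools import reduce
from operator import add


def inside_unit_circle(_):
    """Throw one random dart at the square [-1, 1] x [-1, 1]; 1 if it hits the circle."""
    x = random.random() * 2 - 1
    y = random.random() * 2 - 1
    return 1 if x * x + y * y <= 1 else 0


def estimate_pi(samples):
    # Spark runs a map/reduce like this across partitions; here it runs in one process.
    hits = reduce(add, map(inside_unit_circle, range(samples)))
    # The circle covers pi/4 of the square, so scale the hit ratio by 4.
    return 4.0 * hits / samples


random.seed(0)  # fixed seed only so the sketch is repeatable
print("Pi is roughly %f" % estimate_pi(200_000))
```

More samples tighten the estimate, which is why the Spark version benefits from spreading the work over many partitions.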

Adjust the log level

In spark-2.4.5-bin-hadoop2.7\conf, rename the log4j.properties.template file to log4j.properties

and set the log level to:

log4j.rootCategory=ERROR, console
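
The same edit can be applied programmatically. This is a minimal sketch under assumptions: `set_root_log_level` is a hypothetical helper that works on the text of the properties file, and the `template` string is a two-line stand-in for the real file, which ships with the root level set to INFO.

```python
import re


def set_root_log_level(properties_text, level="ERROR"):
    """Return the properties text with the log4j.rootCategory level replaced."""
    pattern = re.compile(r"^(log4j\.rootCategory\s*=\s*)\w+", flags=re.MULTILINE)
    # A callable replacement avoids any escaping issues in `level`.
    updated, count = pattern.subn(lambda m: m.group(1) + level, properties_text)
    if count == 0:
        raise ValueError("no log4j.rootCategory line found")
    return updated


template = "# Set everything to be logged to the console\nlog4j.rootCategory=INFO, console\n"
print(set_root_log_level(template))
```

In practice the text would be read from conf\log4j.properties.template and the result written to conf\log4j.properties, leaving the other appender settings unchanged.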

Run the example again and the output is much shorter:

C:\Users\maxin>spark-submit %SPARK_HOME%/examples/src/main/python/pi.py

Pi is roughly 3.140380