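Python Web Scraping Case Study: Scraping the Douban Movie Rankings with Requests

From CloudWiki

Objective

Scrape the 9,000 most popular movies from the Douban movie rankings.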

https://movie.douban.com/tag/#/?sort=U&range=0,10&tags=%E7%94%B5%E5%BD%B1

Data scraping

Working out the URL pattern

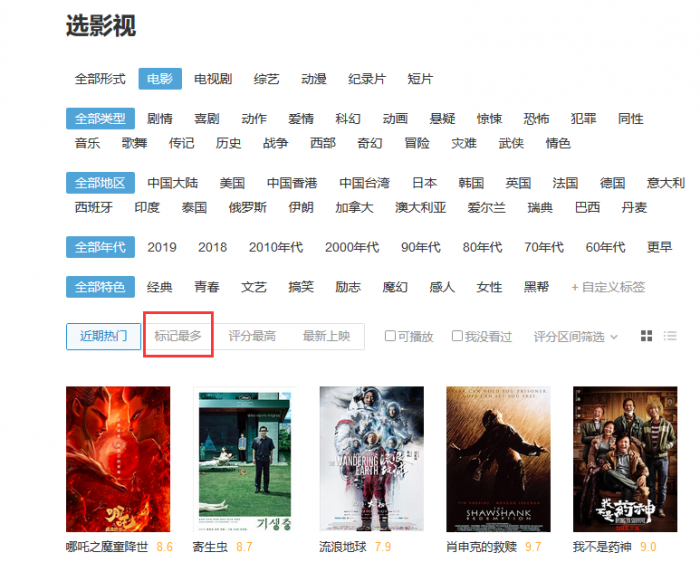

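It is said that the more people have seen a film, the more convincing its rating is. So we go to the navigation page and choose "标记最多" (Most Marked). Being marked by many users is not exactly the same as being watched by many, but it is close enough.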

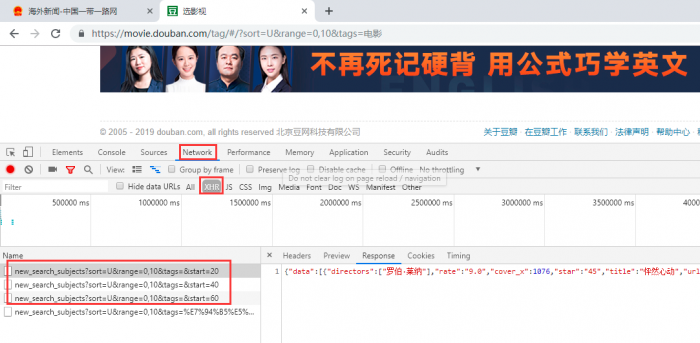

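The usual way to find how the URL changes is to right-click and choose "Inspect" (审查元素). Then keep clicking "加载更多" (Load More) and watch which request each click sends.

The pattern turns out to be remarkably simple. The beginning of the URL never changes, and each additional page adds 20 to the start parameter.

Each page holds 20 movies. Our goal of 9,000 movies therefore means 9000 / 20 = 450 pages.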
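The page arithmetic can be checked in a few lines. Below is a minimal sketch that assumes the query parameters of the base URL used later in this article (sort=T, range=0,10, tags=电影) and 20 movies per page. The helper names pages_needed and page_url are illustrative and are not part of the original code.

```python
from urllib.parse import urlencode

# Assumption: same endpoint and query parameters as the base URL used in this article
BASE = 'https://movie.douban.com/j/new_search_subjects'
PAGE_SIZE = 20  # movies per page, as observed when clicking "Load More"


def pages_needed(total_movies, page_size=PAGE_SIZE):
    """Ceiling division: number of pages needed to cover total_movies."""
    return -(-total_movies // page_size)


def page_url(page, page_size=PAGE_SIZE):
    """URL of zero-based page `page`; only the start offset changes between pages."""
    params = {'sort': 'T', 'range': '0,10', 'tags': '电影', 'start': page * page_size}
    return BASE + '?' + urlencode(params)


print(pages_needed(9000))  # 450
print(page_url(449))       # last page, start=8980
```

urlencode percent-encodes the comma in range as %2C, which the original URL leaves as a literal comma. This sketch assumes the server treats both forms the same.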

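Single-page parsing + looping over pages

Douban is very considerate here. Every page comes back as neatly structured JSON, which saves a lot of work in both scraping and cleaning.

Code for parsing a single page: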

```python
import json
import random
import time

import pandas as pd
import requests


def parse_base_info(url, headers):
    """Fetch one page of the JSON listing and return its movies as a DataFrame."""
    resp = requests.get(url, headers=headers, timeout=10)
    resp.raise_for_status()  # stop on HTTP errors instead of parsing an error page
    bs = json.loads(resp.text)
    rows = []
    for i in bs['data']:
        rows.append({
            '主演': i['casts'],      # leading cast (list)
            '海报': i['cover'],      # poster image URL
            '导演': i['directors'],  # directors (list)
            'ID': i['id'],           # Douban movie ID
            '评分': i['rate'],       # rating out of 10
            '标记': i['star'],       # star level shown on the card, e.g. "45" = 4.5 stars
            '片名': i['title'],      # title
            '网址': i['url'],        # detail page URL
        })
    return pd.DataFrame(rows)
```
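The JSON parsing can be tested offline against a saved response before you send hundreds of requests. Below is a stdlib-only sketch. The payload SAMPLE is hypothetical: its values are placeholders, and it only mimics the fields that parse_base_info reads. The function rows_from_payload is also illustrative.

```python
import csv
import io
import json

# Hypothetical payload with the fields read above; values are placeholders, not real Douban data
SAMPLE = '''{"data": [{"id": "1", "title": "Movie A", "rate": "9.0", "star": "45",
  "url": "https://movie.douban.com/subject/1/", "cover": "https://example.com/a.jpg",
  "directors": ["Director A"], "casts": ["Actor A", "Actor B"]}]}'''

FIELDS = ['id', 'title', 'rate', 'star', 'directors', 'casts', 'cover', 'url']


def rows_from_payload(text):
    """Turn one JSON page into flat dicts; list fields are joined with ' / '."""
    rows = []
    for item in json.loads(text)['data']:
        row = {k: item.get(k) for k in FIELDS}
        for k in ('directors', 'casts'):
            row[k] = ' / '.join(row[k] or [])  # flatten lists so CSV cells stay plain text
        rows.append(row)
    return rows


rows = rows_from_payload(SAMPLE)
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(rows)
print(buf.getvalue())
```

Joining the list fields is a design choice. When pandas writes a cell that holds a Python list, it stores the list's repr (for example "['A', 'B']"), which has to be parsed back later.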

Next, write a function that builds the 450 base URLs we need:

```python
# num = how many pages to fetch; each page corresponds to one click on "Load More"
def format_url(num):
    urls = []
    base_url = 'https://movie.douban.com/j/new_search_subjects?sort=T&range=0,10&tags=%E7%94%B5%E5%BD%B1&start={}'
    for i in range(0, 20 * num, 20):  # start = 0, 20, 40, ...
        url = base_url.format(i)
        urls.append(url)
    return urls


urls = format_url(450)
```

Put the two together and run:

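```python
result = pd.DataFrame()
# Resume point: set to the number of pages already saved to skip them on a rerun
start_page = 0
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'}

for count, url in enumerate(urls, start=1):  # count = page number, starting at 1
    if count <= start_page:  # already crawled in an earlier run
        continue
    df = parse_base_info(url, headers=headers)
    result = pd.concat([result, df])
    time.sleep(random.random() + 5)  # pause 5 to 6 seconds between requests
    print('Crawled page %d' % count)
    if count % 50 == 0:
        # Save every 50 pages to its own file: douban50.csv, douban100.csv, ...
        result.to_csv('douban' + str(count) + '.csv', index=False)
        result = pd.DataFrame()

# Save any leftover pages if the total is not a multiple of 50
if not result.empty:
    result.to_csv('douban' + str(count) + '.csv', index=False)
```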
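The start_page variable above has to be edited by hand after a crash. An alternative, which is not part of the original code, is to persist the resume point in a small progress file. Below is a minimal sketch. The helpers load_progress, save_progress and crawl are hypothetical, and the demo uses a fake fetch function instead of real HTTP requests.

```python
import json
import os
import tempfile


def load_progress(path):
    """Last fully saved page number recorded in `path`, or 0 if there is no record."""
    if not os.path.exists(path):
        return 0
    with open(path, encoding='utf-8') as f:
        return json.load(f)['last_page']


def save_progress(path, page):
    """Write to a temp file, then rename it over the old record (os.replace is atomic on POSIX)."""
    tmp = path + '.tmp'
    with open(tmp, 'w', encoding='utf-8') as f:
        json.dump({'last_page': page}, f)
    os.replace(tmp, path)


def crawl(urls, fetch, save_batch, progress_path, batch_size=50):
    """Fetch pages after the recorded resume point, saving every batch_size pages."""
    start = load_progress(progress_path)
    batch = []
    for page, url in enumerate(urls, start=1):
        if page <= start:
            continue  # saved in an earlier run
        batch.extend(fetch(url))
        if page % batch_size == 0 or page == len(urls):
            save_batch(page, batch)             # persist the data first
            save_progress(progress_path, page)  # then advance the resume point
            batch = []


# Offline demo: fetch returns one fake row per URL; save_batch records (page, row count)
saved = []
with tempfile.TemporaryDirectory() as d:
    progress = os.path.join(d, 'progress.json')
    urls = ['page-%d' % n for n in range(1, 121)]
    crawl(urls, lambda u: [u], lambda page, rows: saved.append((page, len(rows))), progress)
    first_run = list(saved)
    # A second run finds the progress file and skips every page
    crawl(urls, lambda u: [u], lambda page, rows: saved.append((page, len(rows))), progress)
    final_page = load_progress(progress)

print(first_run)   # [(50, 50), (100, 50), (120, 20)]
print(final_page)  # 120
```

Saving the batch before advancing the resume point means a crash between the two steps can at worst re-fetch one batch. It never silently drops one.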

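After a while, the basic data is ready. With a 5 to 6 second pause per request, 450 pages means roughly 40 minutes of waiting alone. For every movie we now have the ID, title, leading cast, directors, rating, the star value, poster and detail-page URL.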

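Next, we want to visit every movie's page in turn and collect richer information, such as the percentage of ratings at each star level. We plan to use this rating distribution later to rank the movies.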
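Once each movie's distribution has been scraped as a mapping from star-row title to a percentage string, it can be reduced to sortable numbers. Below is a minimal sketch. It assumes the five row titles are 力荐, 推荐, 还行, 较差 and 很差 (5 to 1 stars); check this against a real page. The functions and the example distribution are hypothetical.

```python
# Assumed mapping from each star row's title to its star value
STAR_VALUE = {'力荐': 5, '推荐': 4, '还行': 3, '较差': 2, '很差': 1}


def parse_distribution(rating):
    """Convert {'力荐': '50.0%', ...} into {5: 50.0, ...}; unknown titles are skipped."""
    return {STAR_VALUE[k]: float(v.strip().rstrip('%'))
            for k, v in rating.items() if k in STAR_VALUE}


def mean_stars(rating):
    """Percentage-weighted mean star level, or None for an empty distribution."""
    dist = parse_distribution(rating)
    total = sum(dist.values())
    if total == 0:
        return None
    return sum(star * pct for star, pct in dist.items()) / total


def positive_share(rating):
    """Percentage of ratings that are 4 or 5 stars."""
    return sum(pct for star, pct in parse_distribution(rating).items() if star >= 4)


# Hypothetical distribution, not real data
example = {'力荐': '50.0%', '推荐': '30.0%', '还行': '15.0%', '较差': '4.0%', '很差': '1.0%'}
print(mean_stars(example))      # 4.24
print(positive_share(example))  # 80.0
```

Dividing by the summed percentages, rather than assuming exactly 100, keeps the mean correct when the displayed percentages are rounded.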

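Scraping each movie's detail page

Open a single movie's page. The shortcut is to right-click, choose "View Page Source", and check whether the fields we want are in the source. Scraping static source is the least effort.

Movie title? It's there. Director? There. Douban rating? Still there! A round of Ctrl+F searching shows that every field we need is in the page source, so scraping it is easy. Here we parse it with XPath: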
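The Ctrl+F check can also be done in code. Below is a sketch that uses the standard library's html.parser instead of lxml, which is what the article itself uses. It first tests whether a marker such as v:genre appears in the raw source, then collects the text of elements carrying that property attribute. The class PropertyText, the helper property_texts and the HTML fragment are hypothetical illustrations, not copied from Douban.

```python
from html.parser import HTMLParser


class PropertyText(HTMLParser):
    """Collect the text of every element whose property attribute equals `prop`."""

    def __init__(self, prop):
        super().__init__()
        self.prop = prop
        self.tag = None   # tag name of the matching element we are inside
        self.depth = 0    # nesting depth of that tag name (0 = outside)
        self.values = []

    def handle_starttag(self, tag, attrs):
        if self.depth and tag == self.tag:
            self.depth += 1
        elif not self.depth and dict(attrs).get('property') == self.prop:
            self.tag, self.depth = tag, 1
            self.values.append('')

    def handle_endtag(self, tag):
        if self.depth and tag == self.tag:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.values[-1] += data


def property_texts(html, prop):
    parser = PropertyText(prop)
    parser.feed(html)
    parser.close()
    return [v.strip() for v in parser.values]


# Hypothetical fragment shaped like a movie page's info block
SAMPLE = '<div id="info">类型: <span property="v:genre">剧情</span> / <span property="v:genre">犯罪</span></div>'
print('v:genre' in SAMPLE)                # True: the field is in the static source
print(property_texts(SAMPLE, 'v:genre'))  # ['剧情', '犯罪']
```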

```python
import random
import time

import pandas as pd
import requests
from lxml import etree

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'}


def parse_movie_info(url, headers=headers, proxies=None):
    """Scrape one movie detail page and return a one-row DataFrame.

    proxies: optional requests-style dict, e.g. {'https': 'http://host:port'}.
    """
    html = requests.get(url, headers=headers, proxies=proxies, timeout=10)
    bs = etree.HTML(html.text)

    # Title and release year: the two <span>s inside the page's <h1>
    spans = bs.xpath('//div[@id = "wrapper"]/div/h1/span')
    title = spans[0].text
    year = spans[1].text  # e.g. "(1994)"

    # Genres
    m_type = [t.text for t in bs.xpath('//span[@property = "v:genre"]')]

    # Plain text of the info block (directors, country/region, runtime, ...)
    a = bs.xpath('//div[@id= "info"]')[0].xpath('string()')
    # Runtime: text between "片长: " and "分钟\n"
    m_time = a[a.find('片长: ') + 4:a.find('分钟\n')]
    # Country/region: text between "制片国家/地区: " and "\n 语言"
    area = a[a.find('制片国家/地区:') + 9:a.find('\n 语言')]

    # Number of ratings and star distribution (absent for unrated films)
    try:
        people = bs.xpath('//a[@class = "rating_people"]/span')[0].text
        rating = {}
        for rate in bs.xpath('//div[@class = "ratings-on-weight"]/div'):
            rating[rate.xpath('span/@title')[0]] = rate.xpath('span[@class = "rating_per"]')[0].text
    except (IndexError, AttributeError):
        people = 'None'
        rating = {}

    # Synopsis
    try:
        brief = bs.xpath('//span[@property = "v:summary"]')[0].text.strip('\n \u3000\u3000')
    except (IndexError, AttributeError):
        brief = 'None'

    # First popular short review
    try:
        hot_comment = bs.xpath('//div[@id = "hot-comments"]/div/div/p/span')[0].text
    except (IndexError, AttributeError):
        hot_comment = 'None'

    return pd.DataFrame({'片名': [title], '上映时间': [year], '电影类型': [m_type], '片长': [m_time],
                         '地区': [area], '评分人数': [people], '评分分布': [rating],
                         '简介': [brief], '热评': [hot_comment], '网址': [url]})
```
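The find-and-slice approach for runtime and region depends on exact spacing. If a label is missing, find returns -1 and the slice silently returns the wrong text. A more tolerant alternative is a line-based regular expression. Below is a sketch. The helpers info_field and runtime_minutes are hypothetical, and the INFO text is an assumed shape of the info block, not copied from a real page.

```python
import re


def info_field(info_text, label):
    """Rest of the line after 'label:' (ASCII or full-width colon), or None if absent."""
    m = re.search(re.escape(label) + r'[ \t]*[:：][ \t]*(.+)', info_text)
    return m.group(1).strip() if m else None


def runtime_minutes(info_text):
    """First number after '片长:', e.g. 142 from '片长: 142分钟', or None."""
    m = re.search(r'片长[ \t]*[:：][ \t]*(\d+)', info_text)
    return int(m.group(1)) if m else None


# Assumed info-block text; real pages may differ in spacing and field order
INFO = '''导演: 示例导演
    类型: 剧情 / 犯罪
    制片国家/地区: 美国
    语言: 英语
    片长: 142分钟
'''

print(info_field(INFO, '制片国家/地区'))  # 美国
print(runtime_minutes(INFO))              # 142
print(info_field(INFO, 'IMDb'))           # None
```

Restricting the whitespace around the colon to spaces and tabs keeps an empty field from capturing the next line.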

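```python
import os

# Main program
movie_result = pd.DataFrame()
proxies = None  # build your own proxy pool here, e.g. {'https': 'http://host:port'}
start_from = 0  # resume point: number of movies already processed in an earlier run
cw = 1          # error counter
out_path = 'douban_detail.csv'

# douban_full.csv: assumed to be the batch CSVs from the previous step merged into one file
df = pd.read_csv('douban_full.csv', low_memory=False)
for count2, (index, row) in enumerate(df.iterrows(), start=1):
    url = row['网址']
    name = row['片名']
    if count2 <= start_from:  # resume: skip movies already done
        continue
    try:
        cache = parse_movie_info(url, headers=headers, proxies=proxies)
        movie_result = pd.concat([movie_result, cache])
        time.sleep(random.random() + 2)  # pause 2 to 3 seconds
        print('Crawled movie #%d ------- %s' % (count2, name))
    except Exception as e:
        print('Beep beep: error #{}'.format(cw))
        print(e)
        cw += 1
        time.sleep(100)  # back off for 100 seconds, then move on to the next movie
    if count2 % 30 == 0 and not movie_result.empty:
        # Append to one file, writing the header only when the file is new
        movie_result.to_csv(out_path, mode='a', index=False,
                            header=not os.path.exists(out_path))
        movie_result = pd.DataFrame()

# Save whatever is left after the loop
if not movie_result.empty:
    movie_result.to_csv(out_path, mode='a', index=False,
                        header=not os.path.exists(out_path))
```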
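The loop above sleeps a fixed 100 seconds after any error and then skips that movie. A common alternative, which is not what the original does, is to retry with exponential backoff. Below is a minimal sketch. The helper with_retries is hypothetical, and the demo replaces real requests with a function that fails twice before succeeding.

```python
import random
import time


def with_retries(fn, *args, retries=3, base_delay=5.0, sleep=time.sleep, **kwargs):
    """Call fn(*args, **kwargs); after a failure, wait base_delay * 2**attempt
    seconds plus up to 1 s of random jitter, then retry. Re-raise after `retries` retries."""
    for attempt in range(retries + 1):
        try:
            return fn(*args, **kwargs)
        except Exception:
            if attempt == retries:
                raise
            sleep(base_delay * 2 ** attempt + random.random())


# Demo: a stand-in for a request that fails twice, then succeeds
calls = {'n': 0}


def flaky():
    calls['n'] += 1
    if calls['n'] < 3:
        raise ConnectionError('temporary failure')
    return 'ok'


waits = []  # record the delays instead of actually sleeping
result = with_retries(flaky, sleep=waits.append)
print(result, calls['n'], len(waits))  # ok 3 2
```

In the main loop, this could wrap the detail request as cache = with_retries(parse_movie_info, url, headers=headers). The injectable sleep parameter exists only so the backoff can be tested without waiting.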