TensorFlow Example: License Plate Recognition Code and Annotations

From CloudWiki


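License plate localization, correction, and filtering

In PyCharm, select the Python 3.5 interpreter (the same applies to the sections below).

Required libraries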

import cv2 as cv
import numpy as np
from PIL import Image
import matplotlib.pyplot as plt
import tensorflow as tf

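Sobel edge detection

HSV color localization

Combined Sobel and HSV localization

Skewed plate correction

Training the filter model (create a separate training script)

Image loading and preprocessing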

import cv2
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
import os
import random
import tensorflow as tf

# All input images are resized to a height of 60 and a width of 160
IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160
type_num = 2

# Randomly pick an image (as a matrix) and its label. Input: root folder path
def get_plate(file_dir):
    path, label = RandomDir(file_dir)
    filelist = os.listdir(path)
    index = random.randint(0, len(filelist) - 1)
    filename = filelist[index]
    file_path = os.path.join(path, filename)
    img = Image.open(file_path).convert("RGB")
    # Resize to the uniform format: height 60, width 160
    img = img.resize((IMAGE_WIDTH, IMAGE_HEIGHT))
    img = np.array(img)
    # Grayscale. Note: PIL arrays are in RGB order, for which
    # cv2.COLOR_RGB2GRAY is the matching constant.
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Otsu binarization
    ret3, th3 = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    plate_image = np.array(th3)
    return label, plate_image
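The Otsu step picks the binarization threshold automatically from the image histogram. The following is a minimal pure-Python sketch of that idea, not OpenCV's implementation: `otsu_threshold` and `binarize` are illustrative names, and the pixel list is made-up data. It assumes 8-bit gray values and, like `cv2.THRESH_BINARY`, maps pixels strictly above the threshold to 255.

```python
def otsu_threshold(pixels):
    """Return the threshold t that maximizes the between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # intensity sum of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, variance undefined
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t

def binarize(pixels, t, maxval=255):
    """Pixels strictly above t become maxval, the rest become 0."""
    return [maxval if p > t else 0 for p in pixels]

# Made-up bimodal data: a dark background and bright characters
pixels = [10] * 50 + [200] * 50
t = otsu_threshold(pixels)
binary = binarize(pixels, t)
print(t, binary[0], binary[-1])
```

Because the threshold is derived from each image, plates photographed under different lighting can still be separated into background and characters without a hand-tuned constant.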

Generating a training batch

# Generate one training batch
def get_next_batch(batch_size=20):
    batch_x = np.zeros([batch_size, IMAGE_HEIGHT * IMAGE_WIDTH])
    batch_y = np.zeros([batch_size, 1 * type_num])

    # The generated image is sometimes not of size (60, 160, 3)
    def wrap_gen_captcha_text_and_image():
        # while True:
        text, image = get_plate('./CNN/')
        # if image.shape == (60, 160, 3):
        return text, image

    for i in range(batch_size):
        text, image = wrap_gen_captcha_text_and_image()
        # image = convert2gray(image)
        batch_x[i, :] = image.flatten() / 255  # (image.flatten()-128)/128 would give zero mean
        vector = np.zeros(1 * type_num)
        # Sort the class folders so that each label always maps to the same index
        dirlist = sorted(os.listdir('./CNN/'))
        if text in dirlist:
            vector[dirlist.index(text)] = 1
        batch_y[i, :] = vector

    return batch_x, batch_y

def RandomDir(rootDir):
    """Pick a random entry of rootDir; return its path and its name (the label)."""
    filelist = os.listdir(rootDir)
    index = random.randint(0, len(filelist) - 1)
    filename = filelist[index]
    filepath = os.path.join(rootDir, filename)
    return filepath, filename
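The label vector is one-hot: the position of the class folder name in the directory listing becomes the index that is set to 1. Since `os.listdir` does not guarantee any particular order, a stable ordering such as sorting is needed for the indices to line up with a fixed class list at inference time. A small sketch of this encoding, where `one_hot` and the folder names are illustrative assumptions:

```python
def one_hot(label, class_names):
    """Return a one-hot list for `label`, indexed by the sorted class names."""
    ordered = sorted(class_names)
    vector = [0.0] * len(ordered)
    vector[ordered.index(label)] = 1.0
    return vector

# Hypothetical class folders, in the arbitrary order a directory listing might give
folders = ['yes', 'no']
print(one_hot('no', folders))   # [1.0, 0.0]
print(one_hot('yes', folders))  # [0.0, 1.0]
```

With this ordering, index 0 means 'no' and index 1 means 'yes', regardless of how the file system lists the folders.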

Defining the CNN

X = tf.placeholder(tf.float32, [None, IMAGE_HEIGHT * IMAGE_WIDTH])
Y = tf.placeholder(tf.float32, [None, 1 * type_num])
keep_prob = tf.placeholder(tf.float32)  # dropout keep probability

# Define the CNN
def crack_captcha_cnn(w_alpha=0.01, b_alpha=0.1):
    x = tf.reshape(X, shape=[-1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])

    # w_c1_alpha = np.sqrt(2.0/(IMAGE_HEIGHT*IMAGE_WIDTH)) #
    # w_c2_alpha = np.sqrt(2.0/(3*3*32))
    # w_c3_alpha = np.sqrt(2.0/(3*3*64))
    # w_d1_alpha = np.sqrt(2.0/(8*32*64))
    # out_alpha = np.sqrt(2.0/1024)

    # Three convolutional layers, each followed by 2x2 max pooling and dropout
    w_c1 = tf.Variable(w_alpha * tf.random_normal([3, 3, 1, 32]))
    b_c1 = tf.Variable(b_alpha * tf.random_normal([32]))
    conv1 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, w_c1, strides=[1, 1, 1, 1], padding='SAME'), b_c1))
    conv1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv1 = tf.nn.dropout(conv1, keep_prob)

    w_c2 = tf.Variable(w_alpha * tf.random_normal([3, 3, 32, 64]))
    b_c2 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv2 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv1, w_c2, strides=[1, 1, 1, 1], padding='SAME'), b_c2))
    conv2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv2 = tf.nn.dropout(conv2, keep_prob)

    w_c3 = tf.Variable(w_alpha * tf.random_normal([3, 3, 64, 64]))
    b_c3 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv3 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv2, w_c3, strides=[1, 1, 1, 1], padding='SAME'), b_c3))
    conv3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv3 = tf.nn.dropout(conv3, keep_prob)

    # Fully connected layer
    # conv3 has shape 8 x 20 x 64, i.e. 10240 values per image (equal to 8 * 32 * 40)
    w_d = tf.Variable(w_alpha * tf.random_normal([8 * 32 * 40, 1024]))
    b_d = tf.Variable(b_alpha * tf.random_normal([1024]))
    dense = tf.reshape(conv3, [-1, w_d.get_shape().as_list()[0]])
    dense = tf.nn.relu(tf.add(tf.matmul(dense, w_d), b_d))
    dense = tf.nn.dropout(dense, keep_prob)

    # Output layer: one logit per class
    w_out = tf.Variable(w_alpha * tf.random_normal([1024, 1 * type_num]))
    b_out = tf.Variable(b_alpha * tf.random_normal([1 * type_num]))
    out = tf.add(tf.matmul(dense, w_out), b_out)
    # out = tf.nn.softmax(out)
    return out
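The first dimension of the fully connected weight matrix, `8 * 32 * 40`, has to equal the number of values left after the three pooling layers. A short sketch of that arithmetic, assuming (as in the code) stride-1 `'SAME'` convolutions that keep the spatial size and 2x2 `'SAME'` pooling that yields `ceil(size / 2)`; the helper names are illustrative:

```python
import math

def same_pool(size, stride=2):
    """Output length of a pooling layer with 'SAME' padding: ceil(size / stride)."""
    return math.ceil(size / stride)

def flattened_size(height, width, channels, num_pools=3):
    """Height, width and flattened feature count after `num_pools` 2x2 poolings."""
    for _ in range(num_pools):
        height, width = same_pool(height), same_pool(width)
    return height, width, height * width * channels

h, w, n = flattened_size(60, 160, channels=64)
print(h, w, n)  # 8 20 10240
```

The height shrinks 60, 30, 15, 8 and the width 160, 80, 40, 20, so the flattened size is 8 * 20 * 64 = 10240, the same number as 8 * 32 * 40. If `IMAGE_HEIGHT` or `IMAGE_WIDTH` changes, this dimension has to be recomputed.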

Training the CNN

# Training
def train_crack_captcha_cnn():
    import time
    start_time = time.time()
    output = crack_captcha_cnn()

    # loss
    # loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(output, Y))
    loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=output, labels=Y))
    # Question: how do softmax and sigmoid differ when used in the final classification layer?
    # optimizer: to speed up training, the learning rate should start large and then decay slowly
    optimizer = tf.train.AdamOptimizer(learning_rate=0.001).minimize(loss)

    predict = tf.reshape(output, [-1, 1, type_num])
    max_idx_p = tf.argmax(predict, 2)
    max_idx_l = tf.argmax(tf.reshape(Y, [-1, 1, type_num]), 2)
    correct_pred = tf.equal(max_idx_p, max_idx_l)
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    saver = tf.train.Saver()
    with tf.Session() as sess:
        # with tf.device("/gpu:1"):
        sess.run(tf.global_variables_initializer())

        step = 0
        while True:
            batch_x, batch_y = get_next_batch(20)
            _, loss_ = sess.run([optimizer, loss], feed_dict={X: batch_x, Y: batch_y, keep_prob: 0.75})
            print(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), step, loss_)

            # Compute the accuracy every 100 steps (the message reads "accuracy at step N is M")
            if step % 100 == 0:
                batch_x_test, batch_y_test = get_next_batch(20)
                acc = sess.run(accuracy, feed_dict={X: batch_x_test, Y: batch_y_test, keep_prob: 1.})
                print(u'***************************************************************第%s次的准确率为%s' % (step, acc))

                # Once the accuracy exceeds 95%, save the model and stop training.
                # The higher the threshold, the longer training takes; a value too close
                # to 1 is hard to reach. On a CPU, training is slow, CPU usage is high,
                # and the machine runs hot.
                if acc > 0.95:
                    logs_train_dir = './model/'
                    checkpoint_path = os.path.join(logs_train_dir, 'crack_capcha.model')
                    saver.save(sess, checkpoint_path, global_step=step)
                    print(time.time() - start_time)
                    break

            step += 1

train_crack_captcha_cnn()
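The training code asks how softmax and sigmoid differ in the final classification layer. The sketch below computes both losses by hand for a single sample, as an illustration rather than TensorFlow's implementation; the function names and the logits are made up. Sigmoid cross-entropy treats every class as an independent yes/no decision, while softmax cross-entropy normalizes the logits into one distribution over mutually exclusive classes.

```python
import math

def sigmoid_cross_entropy(logits, labels):
    """Mean of independent per-class binary cross-entropies."""
    total = 0.0
    for z, y in zip(logits, labels):
        p = 1.0 / (1.0 + math.exp(-z))  # each class gets its own probability
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(logits)

def softmax_cross_entropy(logits, labels):
    """Cross-entropy against a softmax distribution over all classes."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum) for z, y in zip(logits, labels))

logits = [2.0, -1.0]  # made-up network outputs for two classes
labels = [0.0, 1.0]   # one-hot label: the sample belongs to class 1
print(sigmoid_cross_entropy(logits, labels))
print(softmax_cross_entropy(logits, labels))
```

With one-hot labels, as used here, both losses push the correct logit up and the others down, which is why the sigmoid variant still trains a usable classifier. Softmax is the more common choice when exactly one class is correct.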

Output

019-07-22 02:18:31 298 0.11127849
2019-07-22 02:18:31 299 0.14433172
2019-07-22 02:18:32 300 0.093606435
***************************************************************第300次的准确率为1.0
226.61262154579163
Process finished with exit code 0
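The optimizer comment in the training code suggests starting with a large learning rate and letting it decay, while the code itself keeps a constant 0.001. One common schedule is exponential decay, sketched below in plain Python. The decay parameters are hypothetical values chosen for illustration and are not taken from the original training run.

```python
def decayed_learning_rate(initial_rate, step, decay_steps, decay_rate):
    """Exponential decay: initial_rate * decay_rate ** (step / decay_steps)."""
    return initial_rate * decay_rate ** (step / decay_steps)

# Hypothetical schedule: multiply the rate by 0.9 every 100 steps
for step in (0, 100, 300):
    print(step, decayed_learning_rate(0.001, step, decay_steps=100, decay_rate=0.9))
```

In TensorFlow 1.x the same formula is available as `tf.train.exponential_decay`, whose result can be passed to the optimizer in place of the constant.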

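Validating the model

For validation, build a new CNN with the same structure and load its parameters from the model that was just saved.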

# -*- coding: utf-8 -*-
"""
Created on Wed Aug 29 15:56:38 2018

@author: Administrator
"""
import tensorflow as tf
import os
# import random
import numpy as np
from PIL import Image
import cv2
import matplotlib.pyplot as plt

IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160
type_num = 2

####################################################################
# Class names, in the same order as the label indices used during training
ch_list = ['no', 'yes']

X = tf.placeholder(tf.float32, [None, IMAGE_HEIGHT * IMAGE_WIDTH])
Y = tf.placeholder(tf.float32, [None, 1 * type_num])
keep_prob = tf.placeholder(tf.float32)  # dropout keep probability

# Define the CNN (must match the network used for training)
def crack_captcha_cnn(w_alpha=0.01, b_alpha=0.1):
    x = tf.reshape(X, shape=[-1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])

    # w_c1_alpha = np.sqrt(2.0/(IMAGE_HEIGHT*IMAGE_WIDTH)) #
    # w_c2_alpha = np.sqrt(2.0/(3*3*32))
    # w_c3_alpha = np.sqrt(2.0/(3*3*64))
    # w_d1_alpha = np.sqrt(2.0/(8*32*64))
    # out_alpha = np.sqrt(2.0/1024)

    # Three convolutional layers, each followed by 2x2 max pooling and dropout
    w_c1 = tf.Variable(w_alpha * tf.random_normal([3, 3, 1, 32]))
    b_c1 = tf.Variable(b_alpha * tf.random_normal([32]))
    conv1 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, w_c1, strides=[1, 1, 1, 1], padding='SAME'), b_c1))
    conv1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv1 = tf.nn.dropout(conv1, keep_prob)

    w_c2 = tf.Variable(w_alpha * tf.random_normal([3, 3, 32, 64]))
    b_c2 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv2 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv1, w_c2, strides=[1, 1, 1, 1], padding='SAME'), b_c2))
    conv2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv2 = tf.nn.dropout(conv2, keep_prob)

    w_c3 = tf.Variable(w_alpha * tf.random_normal([3, 3, 64, 64]))
    b_c3 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv3 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv2, w_c3, strides=[1, 1, 1, 1], padding='SAME'), b_c3))
    conv3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv3 = tf.nn.dropout(conv3, keep_prob)

    # Fully connected layer
    w_d = tf.Variable(w_alpha * tf.random_normal([8 * 32 * 40, 1024]))
    b_d = tf.Variable(b_alpha * tf.random_normal([1024]))
    dense = tf.reshape(conv3, [-1, w_d.get_shape().as_list()[0]])
    dense = tf.nn.relu(tf.add(tf.matmul(dense, w_d), b_d))
    dense = tf.nn.dropout(dense, keep_prob)

    # Output layer: one logit per class
    w_out = tf.Variable(w_alpha * tf.random_normal([1024, 1 * type_num]))
    b_out = tf.Variable(b_alpha * tf.random_normal([1 * type_num]))
    out = tf.add(tf.matmul(dense, w_out), b_out)
    # out = tf.nn.softmax(out)
    return out

def crack_captcha(captcha_image):
    """Restore the latest checkpoint and return the predicted class index."""
    output = crack_captcha_cnn()

    saver = tf.train.Saver()
    with tf.Session() as sess:
        model_file = tf.train.latest_checkpoint('./model/')
        saver.restore(sess, model_file)

        predict = tf.argmax(tf.reshape(output, [-1, 1, type_num]), 2)
        # keep_prob = 1 disables dropout at inference time
        text_list = sess.run(predict, feed_dict={X: [captcha_image], keep_prob: 1})
        text = text_list[0].tolist()
        return text[0]

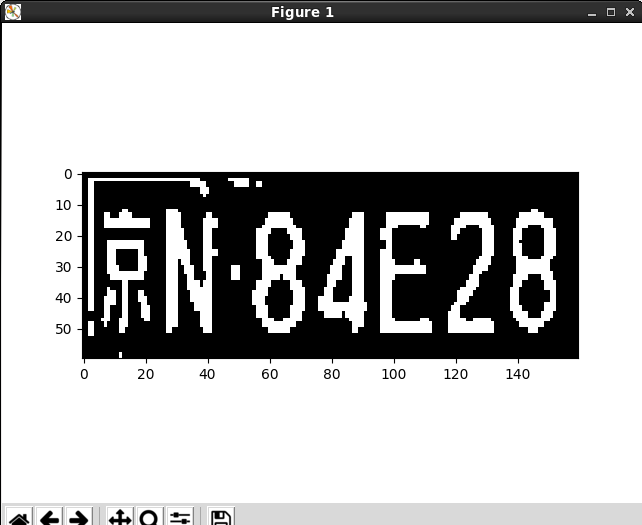

pathname = '2.png'
img = Image.open(pathname).convert("RGB")
# Resize to the uniform format: height 60, width 160
img = img.resize((IMAGE_WIDTH, IMAGE_HEIGHT))
img = np.array(img)
# Grayscale (same preprocessing as in training)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
# Otsu binarization
ret3, th3 = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
plate_image = np.array(th3)
plt.imshow(plate_image, cmap='Greys_r')
plt.show()

# Flatten and scale to [0, 1], as in training
image = plate_image.flatten() / 255
predict_text = ch_list[crack_captcha(image)]
print(predict_text)
tf.reset_default_graph()

Output:

Use standard file APIs to check for files with this prefix.
2019-07-22 02:50:42.435730: W tensorflow/compiler/jit/mark_for_compilation_pass.cc:1412] (One-time warning): Not using XLA:CPU for cluster because envvar TF_XLA_FLAGS=--tf_xla_cpu_global_jit was not set. If you want XLA:CPU, either set that envvar, or use experimental_jit_scope to enable XLA:CPU. To confirm that XLA is active, pass --vmodule=xla_compilation_cache=1 (as a proper command-line flag, not via TF_XLA_FLAGS) or set the envvar XLA_FLAGS=--xla_hlo_profile.
W0722 02:50:43.226647 139788508870400 deprecation_wrapper.py:119] From /home/hadoop/PycharmProjects/tf_plate/test_yes_or_no.py:99: The name tf.reset_default_graph is deprecated. Please use tf.compat.v1.reset_default_graph instead.
yes
Process finished with exit co

The "yes" in the output means the image was recognized as a license plate.

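Cropping candidate plates and filtering them

(Plate) character localization, segmentation, and recognition

Required libraries, helper functions, and variables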

import cv2 as cv
import numpy as np
from PIL import Image
import matplotlib.pyplot as plt
import tensorflow as tf

IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160

def plate_pretreat(img):
    """Plate preprocessing: grayscale, binarization, erosion. Used to locate the character rectangles."""
    # Grayscale
    gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
    # Gaussian blur
    blur = cv.GaussianBlur(gray, (5, 5), 0)
    # Otsu binarization (the threshold is chosen automatically)
    ret3, th3 = cv.threshold(blur, 0, 255, cv.THRESH_BINARY + cv.THRESH_OTSU)
    # Structuring element for dilation/erosion: a 1x1 rectangle (2x2 is also an option)
    kernel = cv.getStructuringElement(cv.MORPH_RECT, (1, 1))
    # Erode the image
    erode = cv.erode(th3, kernel)
    erode_image = np.array(erode)
    return erode_image

def plate_gray(img):
    """Plate preprocessing: grayscale and binarization. Used for character segmentation."""
    # Grayscale
    gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
    # Gaussian blur
    blur = cv.GaussianBlur(gray, (5, 5), 0)
    # Otsu binarization (the threshold is chosen automatically)
    ret3, th3 = cv.threshold(blur, 0, 255, cv.THRESH_BINARY + cv.THRESH_OTSU)
    return th3
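Erosion replaces every pixel with the minimum over the window defined by the structuring element, so the size of that element decides how much the white regions shrink. The pure-Python sketch below illustrates the operation on a tiny made-up image; it is not OpenCV's implementation, `erode` is an illustrative name, it assumes an odd square window centered on the pixel, and it ignores positions outside the image.

```python
def erode(image, ksize):
    """Erode a 2D list of gray values with a centered ksize x ksize window (ksize odd)."""
    r = ksize // 2
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Collect the window values that fall inside the image
            window = [image[j][i]
                      for j in range(max(0, y - r), min(h, y + r + 1))
                      for i in range(max(0, x - r), min(w, x + r + 1))]
            row.append(min(window))
        out.append(row)
    return out

# Made-up 5x5 image with a white 3x3 block in the middle
img = [[255 if 1 <= y <= 3 and 1 <= x <= 3 else 0 for x in range(5)] for y in range(5)]
same = erode(img, 1)     # a 1x1 window only sees the pixel itself
shrunk = erode(img, 3)   # a 3x3 window strips one pixel from every edge
print(same == img, sum(v == 255 for row in shrunk for v in row))
```

A 1x1 element therefore leaves the image unchanged, and a larger element is needed when thin noise or touching strokes must actually be removed.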

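Training the Chinese character recognition model (create a separate training script)

The segmented Chinese character images are stored in the CNN_ch directory; the same applies below.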

import cv2
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
import os
import random
import tensorflow as tf

# All input images are resized to a height of 60 and a width of 160
IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160
type_num = 31

# Randomly pick an image (as a matrix) and its label. Input: root folder path
def get_plate(file_dir):
    path, label = RandomDir(file_dir)
    filelist = os.listdir(path)
    index = random.randint(0, len(filelist) - 1)
    filename = filelist[index]
    file_path = os.path.join(path, filename)
    img = Image.open(file_path).convert("RGB")
    # Resize to the uniform format: height 60, width 160
    img = img.resize((IMAGE_WIDTH, IMAGE_HEIGHT))
    img = np.array(img)
    # Grayscale
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Otsu binarization
    ret3, th3 = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    plate_image = np.array(th3)
    return label, plate_image

# Generate one training batch
def get_next_batch(batch_size=20):
    batch_x = np.zeros([batch_size, IMAGE_HEIGHT * IMAGE_WIDTH])
    batch_y = np.zeros([batch_size, 1 * type_num])

    # The generated image is sometimes not of size (60, 160, 3)
    def wrap_gen_captcha_text_and_image():
        # while True:
        text, image = get_plate('/home/hadoop/PycharmProjects/tf_plate/CNNch/')
        # if image.shape == (60, 160, 3):
        return text, image

    for i in range(batch_size):
        text, image = wrap_gen_captcha_text_and_image()
        # image = convert2gray(image)
        batch_x[i, :] = image.flatten() / 255  # (image.flatten()-128)/128 would give zero mean
        vector = np.zeros(1 * type_num)
        # Sort the class folders so that each label always maps to the same index
        dirlist = sorted(os.listdir('/home/hadoop/PycharmProjects/tf_plate/CNNch/'))
        if text in dirlist:
            vector[dirlist.index(text)] = 1
        batch_y[i, :] = vector

    return batch_x, batch_y

def RandomDir(rootDir):
    """Pick a random entry of rootDir; return its path and its name (the label)."""
    filelist = os.listdir(rootDir)
    index = random.randint(0, len(filelist) - 1)
    filename = filelist[index]
    filepath = os.path.join(rootDir, filename)
    return filepath, filename

X = tf.placeholder(tf.float32, [None, IMAGE_HEIGHT * IMAGE_WIDTH])
Y = tf.placeholder(tf.float32, [None, 1 * type_num])
keep_prob = tf.placeholder(tf.float32)  # dropout keep probability

# Define the CNN
def crack_captcha_cnn(w_alpha=0.01, b_alpha=0.1):
    x = tf.reshape(X, shape=[-1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])

    # w_c1_alpha = np.sqrt(2.0/(IMAGE_HEIGHT*IMAGE_WIDTH)) #
    # w_c2_alpha = np.sqrt(2.0/(3*3*32))
    # w_c3_alpha = np.sqrt(2.0/(3*3*64))
    # w_d1_alpha = np.sqrt(2.0/(8*32*64))
    # out_alpha = np.sqrt(2.0/1024)

    # Three convolutional layers, each followed by 2x2 max pooling and dropout
    w_c1 = tf.Variable(w_alpha * tf.random_normal([3, 3, 1, 32]))
    b_c1 = tf.Variable(b_alpha * tf.random_normal([32]))
    conv1 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, w_c1, strides=[1, 1, 1, 1], padding='SAME'), b_c1))
    conv1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv1 = tf.nn.dropout(conv1, keep_prob)

    w_c2 = tf.Variable(w_alpha * tf.random_normal([3, 3, 32, 64]))
    b_c2 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv2 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv1, w_c2, strides=[1, 1, 1, 1], padding='SAME'), b_c2))
    conv2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv2 = tf.nn.dropout(conv2, keep_prob)

    w_c3 = tf.Variable(w_alpha * tf.random_normal([3, 3, 64, 64]))
    b_c3 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv3 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv2, w_c3, strides=[1, 1, 1, 1], padding='SAME'), b_c3))
    conv3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv3 = tf.nn.dropout(conv3, keep_prob)

    # Fully connected layer
    w_d = tf.Variable(w_alpha * tf.random_normal([8 * 32 * 40, 1024]))
    b_d = tf.Variable(b_alpha * tf.random_normal([1024]))
    dense = tf.reshape(conv3, [-1, w_d.get_shape().as_list()[0]])
    dense = tf.nn.relu(tf.add(tf.matmul(dense, w_d), b_d))
    dense = tf.nn.dropout(dense, keep_prob)

    # Output layer: one logit per class
    w_out = tf.Variable(w_alpha * tf.random_normal([1024, 1 * type_num]))
    b_out = tf.Variable(b_alpha * tf.random_normal([1 * type_num]))
    out = tf.add(tf.matmul(dense, w_out), b_out)
    # out = tf.nn.softmax(out)
    return out

# Training
def train_crack_captcha_cnn():
    import time
    start_time = time.time()
    output = crack_captcha_cnn()

    # loss
    # loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(output, Y))
    loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=output, labels=Y))
    # Question: how do softmax and sigmoid differ when used in the final classification layer?
    # optimizer: to speed up training, the learning rate should start large and then decay slowly
    optimizer = tf.train.AdamOptimizer(learning_rate=0.001).minimize(loss)

    predict = tf.reshape(output, [-1, 1, type_num])
    max_idx_p = tf.argmax(predict, 2)
    max_idx_l = tf.argmax(tf.reshape(Y, [-1, 1, type_num]), 2)
    correct_pred = tf.equal(max_idx_p, max_idx_l)
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    saver = tf.train.Saver()
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())

        step = 0
        while True:
            batch_x, batch_y = get_next_batch(20)
            _, loss_ = sess.run([optimizer, loss], feed_dict={X: batch_x, Y: batch_y, keep_prob: 0.75})
            print(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), step, loss_)

            # Compute the accuracy every 100 steps (the message reads "accuracy at step N is M")
            if step % 100 == 0:
                batch_x_test, batch_y_test = get_next_batch(20)
                acc = sess.run(accuracy, feed_dict={X: batch_x_test, Y: batch_y_test, keep_prob: 1.})
                print(u'***************************************************************第%s次的准确率为%s' % (step, acc))

                # Once the accuracy exceeds 95%, save the model and stop training.
                # The higher the threshold, the longer training takes; a value too close
                # to 1 is hard to reach. On a CPU, training is slow, CPU usage is high,
                # and the machine runs hot.
                if acc > 0.95:
                    logs_train_dir = '/home/hadoop/PycharmProjects/tf_plate/model_ch/'
                    checkpoint_path = os.path.join(logs_train_dir, 'crack_capcha.model')
                    saver.save(sess, checkpoint_path, global_step=step)
                    print(time.time() - start_time)
                    break

            step += 1

train_crack_captcha_cnn()

Output:

2019-07-22 03:28:48 898 0.016501004
2019-07-22 03:28:48 899 0.015574927
2019-07-22 03:28:49 900 0.011108566
***************************************************************第900次的准确率为1.0
566.4421260356903

Training the letter and digit recognition model (create a separate training script)

import cv2
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
import os
import random
import tensorflow as tf

# All input images are resized to a height of 60 and a width of 160
IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160
type_num = 34

# Randomly pick an image (as a matrix) and its label. Input: root folder path
def get_plate(file_dir):
    path, label = RandomDir(file_dir)
    filelist = os.listdir(path)
    index = random.randint(0, len(filelist) - 1)
    filename = filelist[index]
    file_path = os.path.join(path, filename)
    img = Image.open(file_path).convert("RGB")
    # Resize to the uniform format: height 60, width 160
    img = img.resize((IMAGE_WIDTH, IMAGE_HEIGHT))
    img = np.array(img)
    # Grayscale
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Otsu binarization
    ret3, th3 = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    plate_image = np.array(th3)
    return label, plate_image

# Generate one training batch
def get_next_batch(batch_size=20):
    batch_x = np.zeros([batch_size, IMAGE_HEIGHT * IMAGE_WIDTH])
    batch_y = np.zeros([batch_size, 1 * type_num])

    # The generated image is sometimes not of size (60, 160, 3)
    def wrap_gen_captcha_text_and_image():
        # while True:
        text, image = get_plate('/home/hadoop/PycharmProjects/tf_plate/CNN_other/')
        # if image.shape == (60, 160, 3):
        return text, image

    for i in range(batch_size):
        text, image = wrap_gen_captcha_text_and_image()
        # image = convert2gray(image)
        batch_x[i, :] = image.flatten() / 255  # (image.flatten()-128)/128 would give zero mean
        vector = np.zeros(1 * type_num)
        # Sort the class folders so that each label always maps to the same index
        dirlist = sorted(os.listdir('/home/hadoop/PycharmProjects/tf_plate/CNN_other/'))
        if text in dirlist:
            vector[dirlist.index(text)] = 1
        batch_y[i, :] = vector

    return batch_x, batch_y

def RandomDir(rootDir):
    """Pick a random entry of rootDir; return its path and its name (the label)."""
    filelist = os.listdir(rootDir)
    index = random.randint(0, len(filelist) - 1)
    filename = filelist[index]
    filepath = os.path.join(rootDir, filename)
    return filepath, filename

X = tf.placeholder(tf.float32, [None, IMAGE_HEIGHT * IMAGE_WIDTH])
Y = tf.placeholder(tf.float32, [None, 1 * type_num])
keep_prob = tf.placeholder(tf.float32)  # dropout keep probability

# Define the CNN
def crack_captcha_cnn(w_alpha=0.01, b_alpha=0.1):
    x = tf.reshape(X, shape=[-1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])

    # w_c1_alpha = np.sqrt(2.0/(IMAGE_HEIGHT*IMAGE_WIDTH)) #
    # w_c2_alpha = np.sqrt(2.0/(3*3*32))
    # w_c3_alpha = np.sqrt(2.0/(3*3*64))
    # w_d1_alpha = np.sqrt(2.0/(8*32*64))
    # out_alpha = np.sqrt(2.0/1024)

    # Three convolutional layers, each followed by 2x2 max pooling and dropout
    w_c1 = tf.Variable(w_alpha * tf.random_normal([3, 3, 1, 32]))
    b_c1 = tf.Variable(b_alpha * tf.random_normal([32]))
    conv1 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, w_c1, strides=[1, 1, 1, 1], padding='SAME'), b_c1))
    conv1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv1 = tf.nn.dropout(conv1, keep_prob)

    w_c2 = tf.Variable(w_alpha * tf.random_normal([3, 3, 32, 64]))
    b_c2 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv2 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv1, w_c2, strides=[1, 1, 1, 1], padding='SAME'), b_c2))
    conv2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv2 = tf.nn.dropout(conv2, keep_prob)

    w_c3 = tf.Variable(w_alpha * tf.random_normal([3, 3, 64, 64]))
    b_c3 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv3 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv2, w_c3, strides=[1, 1, 1, 1], padding='SAME'), b_c3))
    conv3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv3 = tf.nn.dropout(conv3, keep_prob)

    # Fully connected layer
    w_d = tf.Variable(w_alpha * tf.random_normal([8 * 32 * 40, 1024]))
    b_d = tf.Variable(b_alpha * tf.random_normal([1024]))
    dense = tf.reshape(conv3, [-1, w_d.get_shape().as_list()[0]])
    dense = tf.nn.relu(tf.add(tf.matmul(dense, w_d), b_d))
    dense = tf.nn.dropout(dense, keep_prob)

    # Output layer: one logit per class
    w_out = tf.Variable(w_alpha * tf.random_normal([1024, 1 * type_num]))
    b_out = tf.Variable(b_alpha * tf.random_normal([1 * type_num]))
    out = tf.add(tf.matmul(dense, w_out), b_out)
    # out = tf.nn.softmax(out)
    return out

# Training
def train_crack_captcha_cnn():
    import time
    start_time = time.time()
    output = crack_captcha_cnn()

    # loss
    # loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(output, Y))
    loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=output, labels=Y))
    # Question: how do softmax and sigmoid differ when used in the final classification layer?
    # optimizer: to speed up training, the learning rate should start large and then decay slowly
    optimizer = tf.train.AdamOptimizer(learning_rate=0.001).minimize(loss)

    predict = tf.reshape(output, [-1, 1, type_num])
    max_idx_p = tf.argmax(predict, 2)
    max_idx_l = tf.argmax(tf.reshape(Y, [-1, 1, type_num]), 2)
    correct_pred = tf.equal(max_idx_p, max_idx_l)
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    saver = tf.train.Saver()
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())

        step = 0
        while True:
            batch_x, batch_y = get_next_batch(20)
            _, loss_ = sess.run([optimizer, loss], feed_dict={X: batch_x, Y: batch_y, keep_prob: 0.75})
            print(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), step, loss_)

            # Compute the accuracy every 100 steps (the message reads "accuracy at step N is M")
            if step % 100 == 0:
                batch_x_test, batch_y_test = get_next_batch(20)
                acc = sess.run(accuracy, feed_dict={X: batch_x_test, Y: batch_y_test, keep_prob: 1.})
                print(u'***************************************************************第%s次的准确率为%s' % (step, acc))

                # Once the accuracy exceeds 95%, save the model and stop training.
                # The higher the threshold, the longer training takes; a value too close
                # to 1 is hard to reach. On a CPU, training is slow, CPU usage is high,
                # and the machine runs hot.
                if acc > 0.95:
                    logs_train_dir = '/home/hadoop/PycharmProjects/tf_plate/model_num/'
                    checkpoint_path = os.path.join(logs_train_dir, 'crack_capcha.model')
                    saver.save(sess, checkpoint_path, global_step=step)
                    print(time.time() - start_time)
                    break

            step += 1

train_crack_captcha_cnn()

Output:

019-07-22 04:49:58 498 0.014593856
2019-07-22 04:49:59 499 0.012750196
2019-07-22 04:50:00 500 0.01011668
***************************************************************第500次的准确率为1.0
869.82888007164
Process finished with exit code 0

Validating letters and digits

# -*- coding: utf-8 -*-
"""
Created on Wed Aug 29 15:56:38 2018

@author: Administrator
"""
import tensorflow as tf
import os
# import random
import numpy as np
from PIL import Image
import cv2
import matplotlib.pyplot as plt

IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160
type_num = 34

####################################################################
# Class names, in the same order as the label indices used during training
ch_list = ['0','1','2','3','4','5','6','7','8','9','A','B','C','D','E','F','G','H','J','K','L','M','N','P','Q','R','S','T','U','V','W','X','Y','Z']

X = tf.placeholder(tf.float32, [None, IMAGE_HEIGHT * IMAGE_WIDTH])
Y = tf.placeholder(tf.float32, [None, 1 * type_num])
keep_prob = tf.placeholder(tf.float32)  # dropout keep probability

# Define the CNN (must match the network used for training)
def crack_captcha_cnn(w_alpha=0.01, b_alpha=0.1):
    x = tf.reshape(X, shape=[-1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])

    # w_c1_alpha = np.sqrt(2.0/(IMAGE_HEIGHT*IMAGE_WIDTH)) #
    # w_c2_alpha = np.sqrt(2.0/(3*3*32))
    # w_c3_alpha = np.sqrt(2.0/(3*3*64))
    # w_d1_alpha = np.sqrt(2.0/(8*32*64))
    # out_alpha = np.sqrt(2.0/1024)

    # Three convolutional layers, each followed by 2x2 max pooling and dropout
    w_c1 = tf.Variable(w_alpha * tf.random_normal([3, 3, 1, 32]))
    b_c1 = tf.Variable(b_alpha * tf.random_normal([32]))
    conv1 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, w_c1, strides=[1, 1, 1, 1], padding='SAME'), b_c1))
    conv1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv1 = tf.nn.dropout(conv1, keep_prob)

    w_c2 = tf.Variable(w_alpha * tf.random_normal([3, 3, 32, 64]))
    b_c2 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv2 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv1, w_c2, strides=[1, 1, 1, 1], padding='SAME'), b_c2))
    conv2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv2 = tf.nn.dropout(conv2, keep_prob)

    w_c3 = tf.Variable(w_alpha * tf.random_normal([3, 3, 64, 64]))
    b_c3 = tf.Variable(b_alpha * tf.random_normal([64]))
    conv3 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv2, w_c3, strides=[1, 1, 1, 1], padding='SAME'), b_c3))
    conv3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    conv3 = tf.nn.dropout(conv3, keep_prob)

    # Fully connected layer
    w_d = tf.Variable(w_alpha * tf.random_normal([8 * 32 * 40, 1024]))
    b_d = tf.Variable(b_alpha * tf.random_normal([1024]))
    dense = tf.reshape(conv3, [-1, w_d.get_shape().as_list()[0]])
    dense = tf.nn.relu(tf.add(tf.matmul(dense, w_d), b_d))
    dense = tf.nn.dropout(dense, keep_prob)

    # Output layer: one logit per class
    w_out = tf.Variable(w_alpha * tf.random_normal([1024, 1 * type_num]))
    b_out = tf.Variable(b_alpha * tf.random_normal([1 * type_num]))
    out = tf.add(tf.matmul(dense, w_out), b_out)
    # out = tf.nn.softmax(out)
    return out

def crack_captcha(captcha_image):
    """Restore the latest checkpoint and return the predicted class index."""
    output = crack_captcha_cnn()

    saver = tf.train.Saver()
    with tf.Session() as sess:
        model_file = tf.train.latest_checkpoint('./model_num/')
        saver.restore(sess, model_file)

        predict = tf.argmax(tf.reshape(output, [-1, 1, type_num]), 2)
        # keep_prob = 1 disables dropout at inference time
        text_list = sess.run(predict, feed_dict={X: [captcha_image], keep_prob: 1})
        text = text_list[0].tolist()
        return text[0]

pathname = '.png'
img = Image.open(pathname).convert("RGB")
# Resize to the uniform format: height 60, width 160
img = img.resize((IMAGE_WIDTH, IMAGE_HEIGHT))
img = np.array(img)
# Grayscale (same preprocessing as in training)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
# Otsu binarization
ret3, th3 = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
plate_image = np.array(th3)
plt.imshow(plate_image, cmap='Greys_r')
plt.show()

# Flatten and scale to [0, 1], as in training
image = plate_image.flatten() / 255
predict_text = ch_list[crack_captcha(image)]
print(predict_text)
tf.reset_default_graph()
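The `ch_list` used for letters and digits has 34 entries: the ten digits plus the uppercase letters with I and O left out, which matches `type_num = 34`. A small sketch of how such a list can be built instead of typed by hand; `plate_alphanumerics` is an illustrative helper name, not part of the original scripts:

```python
import string

def plate_alphanumerics():
    """Digits followed by uppercase letters, excluding I and O."""
    letters = [c for c in string.ascii_uppercase if c not in 'IO']
    return list(string.digits) + letters

ch_list = plate_alphanumerics()
print(len(ch_list))  # 34
```

The resulting list is already in sorted order, so it lines up with class folders that are indexed in sorted order during training.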

Document source: 2019 山大 training

Document location:

Link: https://pan.baidu.com/s/1t-GWSCdr92bYr2m2InIkDw Extraction code: wji3