Collecting and Analyzing Data from a Discuz Forum

From CloudWiki

Revision as of 10:01, Monday, 19 November 2018, by 201708010242

Target Environment

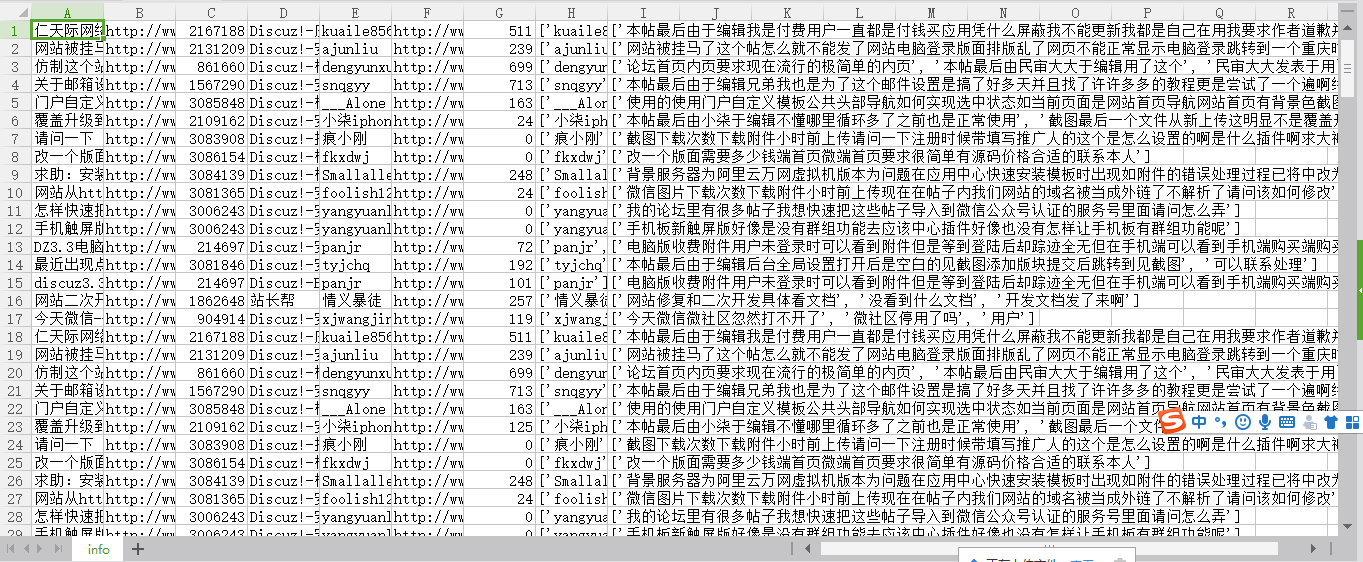

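- Discuz! is a well-known open-source PHP community system in China. It is built on PHP and MySQL and is designed as an efficient forum system for a wide range of server environments. Visiting the target site's IP address directly opens the forum home page. The forum's default modules contain 5,800+ threads and 1,700+ replies, 7,500+ valid posts in total, and 550+ members. The information involved includes: forum boards, thread authors, repliers, thread author IDs, thread author names, replier IDs, replier names, user avatars, thread content, reply content, thread IDs, and reply IDs.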

Logical Diagram

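The logical relationships are:

- (1) A forum board has many threads.
- (2) A user has many threads.
- (3) A user has many replies.
- (4) A thread has many replies.
- (5) A thread contains: thread id, thread title, thread content, author id.
- (6) A reply contains: thread id, reply id, reply content, replier id.
- (7) A user contains: user id, name, avatar.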
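The relationships above can be sketched as a small set of Python data classes. This is only an illustrative model: the class names, field names, and the helper `replies_by_user` are assumptions made for this example and do not appear in the scraper code on this page.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class User:
    uid: str
    name: str
    avatar_url: str = ""


@dataclass
class Reply:
    reply_id: str
    thread_id: str      # the thread this reply belongs to
    author_uid: str
    content: str


@dataclass
class Thread:
    thread_id: str
    title: str
    content: str
    author_uid: str
    replies: List[Reply] = field(default_factory=list)   # one thread, many replies


@dataclass
class Board:
    name: str
    threads: List[Thread] = field(default_factory=list)  # one board, many threads


def replies_by_user(boards, uid):
    """Collect every reply written by the given user across all boards."""
    return [
        reply
        for board in boards
        for thread in board.threads
        for reply in thread.replies
        if reply.author_uid == uid
    ]
```

Users are linked to threads and replies by id rather than by nesting, so the same user can appear as both a thread author and a replier without being duplicated.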

Procedure

Code Implementation

import csv
import re
from urllib.parse import parse_qs, urlparse

import requests
from bs4 import BeautifulSoup

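# Fetch the HTML source of one page of the "newest threads" listing
def get_url_content(page):
    url = 'http://www.discuz.net/forum.php?mod=guide&view=new&page=' + str(page)
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36'}
    try:
        response = requests.get(url, headers=headers, timeout=10)
    except requests.RequestException:
        # Network errors and timeouts are reported the same way as a bad status
        return False
    if response.status_code == 200:
        # The site answers with this message (and HTTP 200) when the content
        # does not exist, was deleted, or is under review
        if "抱歉,指定的主题不存在或已被删除或正在被审核" in response.text:
            return False
        return response.text
    return False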

def parse_post_data(html_text):
    """Yield one dict per thread row found on a listing page."""
    soup_object = BeautifulSoup(html_text, 'lxml')
    newlist = soup_object.select('.bm_c table tbody tr')
    for new in newlist:
        try:
            link = new.select('.common a')[0]
            title = link.text
            url = 'http://www.discuz.net/' + link['href']
            # The thread page itself is not fetched here; main() does that
            by_cells = new.select('.by')
            plate = by_cells[0].select('a')[0].text    # forum board
            author = by_cells[1].select('a')[0].text
            authorHome = 'http://www.discuz.net/' + by_cells[1].select('cite a')[0]['href']
            # The author's uid is the "uid" query parameter of the profile URL
            parsed_url = urlparse(authorHome)
            query_string_object = parse_qs(parsed_url.query)
            uid = query_string_object['uid'][0]
            commNum = new.select('.num em')[0].text    # reply count
            post_content_info = {
                'title': title,
                'url': url,
                'uid': uid,
                'plate': plate,
                'author': author,
                'authorHome': authorHome,
                'commNum': commNum
            }
            yield post_content_info
        except (IndexError, KeyError):
            # Rows without the expected cells or without a uid are skipped
            continue
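The uid extraction step can be tried in isolation. The sketch below assumes profile links of the form `home.php?mod=space&uid=...`; the helper name `extract_uid` and the sample URL are made up for illustration. A site with URL rewriting enabled may expose profile links without a query string, in which case this approach finds no uid and the helper returns `None`.

```python
from urllib.parse import urlparse, parse_qs


def extract_uid(profile_url):
    """Return the uid query parameter of a profile URL, or None if absent."""
    query = parse_qs(urlparse(profile_url).query)
    values = query.get('uid')
    return values[0] if values else None


# Hypothetical profile link used only for this example
sample = 'http://www.discuz.net/home.php?mod=space&uid=12345'
print(extract_uid(sample))
```

Using `dict.get` instead of indexing avoids a `KeyError` when the parameter is missing, so the caller can decide how to handle rows without a uid.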

def get_url_deatil_content(url):
    """Fetch a thread page; return its HTML, or the string 'error' on failure."""
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36'}
    try:
        response = requests.get(url, headers=headers, timeout=10)
    except requests.RequestException:
        return 'error'
    if response.status_code == 200:
        return response.text
    return 'error'

def parse_deatil_content(detail):
    """Extract poster names and post bodies from a thread page."""
    soup = BeautifulSoup(detail, 'lxml')
    commList = soup.select('.t_f')            # post bodies
    userList = soup.select('.authi a.xw1')    # poster names
    userArr = [user.text for user in userList]
    # Keep only Chinese characters (U+4E00 to U+9FA5); digits, Latin letters,
    # punctuation and whitespace are removed
    commArr = [re.sub(r'[^\u4e00-\u9fa5]+', '', comm.text) for comm in commList]
    return [userArr, commArr]
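To see what the cleanup pattern in `parse_deatil_content` does to a post body, the same regular expression can be run on a sample string. The helper name `keep_chinese` and the sample text are assumptions for this example only.

```python
import re

# Matches every run of characters outside the range U+4E00 to U+9FA5
NON_CHINESE = re.compile(r'[^\u4e00-\u9fa5]+')


def keep_chinese(text):
    """Remove everything except Chinese characters in U+4E00..U+9FA5."""
    return NON_CHINESE.sub('', text)


print(keep_chinese('Discuz! X3.4 安装教程, 第2步:配置 MySQL'))
# prints: 安装教程第步配置
```

The filter is convenient for later word-frequency analysis of Chinese text, but it also discards version numbers, English terms, and all punctuation, so the stored content is not suitable for reconstructing the original post.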

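def save_csv(line):
    """Append one row to info.csv (helper; main() writes rows directly)."""
    # Append mode: opening with 'w' would truncate the file on every call
    with open('info.csv', 'a', newline='', encoding='utf-8') as f:
        writer = csv.writer(f)
        writer.writerow(line)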

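def main():
    # The with-block closes the file even if a request or parse step fails
    with open('info.csv', 'w', newline='', encoding='utf-8') as f:
        writer = csv.writer(f)
        # 12 listing pages; numbering starts at 1, since page=0 would most
        # likely just repeat the first page
        for page in range(1, 13):
            html = get_url_content(str(page))
            if not html:
                # Skip pages that could not be fetched
                continue
            for info in parse_post_data(html):
                # Fetch the thread page and collect its posters and post bodies
                nextHtml = get_url_deatil_content(info['url'])
                user, comm = parse_deatil_content(nextHtml)
                info['user'] = user
                info['comm'] = comm
                line = [str(value) for value in info.values()]
                print(line)
                writer.writerow(line)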

if __name__ == '__main__':
    main()
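Once `info.csv` has been written, a first analysis step could be to count the collected threads per forum board. The sketch below is one possible approach, not part of the original script. It assumes the file is UTF-8 encoded and has no header row, and that the column order matches the keys of the dict built in `parse_post_data` followed by the `user` and `comm` fields added in `main`. The names `COLUMNS` and `threads_per_board` are introduced here for illustration.

```python
import csv
from collections import Counter

# Assumed column order of info.csv (the file itself has no header row)
COLUMNS = ['title', 'url', 'uid', 'plate', 'author',
           'authorHome', 'commNum', 'user', 'comm']


def threads_per_board(csv_path):
    """Count how many collected threads belong to each forum board."""
    counts = Counter()
    with open(csv_path, newline='', encoding='utf-8') as f:
        for row in csv.reader(f):
            if len(row) != len(COLUMNS):
                continue  # skip rows that do not match the expected layout
            record = dict(zip(COLUMNS, row))
            counts[record['plate']] += 1
    return counts
```

Calling `threads_per_board('info.csv').most_common(10)` would then list the ten boards with the most collected threads, together with their counts.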